Many teams I have worked with seem to prefer UI level automation, or think that this level of testing is necessary to prove the required business functionality. Almost all of them realised six to nine months after starting this effort that the cost of maintaining UI level tests is higher than the benefit they bring. Many threw away the tests at that point and effectively lost all the effort they had put into them. If you have to do UI test automation (which I’d challenge in the first place), here is how to go about doing it so that the cost of maintenance doesn’t kill you later.

Three levels of UI test automation

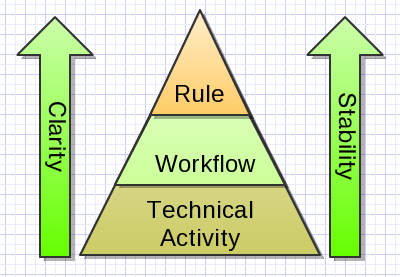

A very good idea when designing UI level functional tests is to think about describing the test and the automation at these three levels:

- Business rule/functionality level: what is this test demonstrating or exercising. For example: Free delivery is offered to customers who order two or more books.

- User interface workflow level: what does a user have to do to exercise the functionality through the UI, on a higher activity level. For example, put two books in a shopping cart, enter address details, verify that delivery options include free delivery.

- Technical activity level: what are the technical steps required to exercise the functionality. For example, open the shop homepage, log in with “testuser” and “testpassword”, go to the “/book” page, click on the first image with the “book” CSS class, wait for page to load, click on the “Buy now” link… and so on.
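
As a rough illustration, the three levels from the list above might look like this in Python. This is only a sketch under stated assumptions: `FakeShop` is a hypothetical in-memory stand-in for the real web site and browser driver, and every function name and the example address are made up for this example rather than taken from any particular tool.

```python
class FakeShop:
    """Hypothetical in-memory stand-in for the shop UI and its driver."""

    def __init__(self):
        self.steps = []          # technical steps performed, in order
        self.books_in_cart = 0
        self.address = None

    def perform(self, step):
        self.steps.append(step)

    def delivery_options(self):
        # Canned behaviour standing in for the real site.
        options = ["standard"]
        if self.books_in_cart >= 2:
            options.append("free")
        return options


# Technical activity level: knows about pages, CSS classes and links.
def log_in(shop, user, password):
    shop.perform("open the shop homepage")
    shop.perform(f"log in with {user} and {password}")


def add_first_book_to_cart(shop):
    shop.perform("go to the /book page")
    shop.perform("click the first image with the 'book' CSS class")
    shop.perform("click the 'Buy now' link")
    shop.books_in_cart += 1


def enter_address(shop, address):
    shop.perform("fill in the address form")
    shop.address = address


# User interface workflow level: what the user does, with no page details.
def order_books(shop, count):
    log_in(shop, "testuser", "testpassword")
    for _ in range(count):
        add_first_book_to_cart(shop)
    enter_address(shop, "1 Example Street")
    return shop.delivery_options()


# Business rule level: states the rule and nothing else.
def test_free_delivery_for_two_or_more_books():
    assert "free" in order_books(FakeShop(), 2)
```

Only the functions in the middle block know anything about pages, links or CSS classes. The test at the bottom reads almost like the business rule itself.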

At the point where they figured out that UI testing is not paying off, most teams I interviewed were describing tests at the technical level only (an extreme case of this is recorded test scripts, where even the third level isn’t human readable). Such tests are very brittle, and many of them tend to break with even the smallest change in the UI. The third level is quite verbose as well, so it is often hard to understand what is broken when a test fails. Some teams were describing tests at the workflow level, which was a bit more stable. These tests weren’t bound to a particular layout, but they were bound to the user interface implementation. When the page workflow changes, or when the underlying technology changes, such tests break.

Before anyone starts writing an angry comment about the technical level being the only thing that works, I want to say: Yes, we do need the third level. It is where the automation really happens and where the test exercises our web site. But there are serious benefits to not having only the third level.

The stability in acceptance tests comes from the fact that business rules don’t change as much as technical implementations. Technology moves much faster than business. The closer your acceptance tests are to the business rules, the more stable they are. Note that this doesn’t necessarily mean that these tests won’t be executed through the user interface - just that they are defined in a way that is not bound to a particular user interface.

The idea of thinking about these different levels is good because it allows us to write UI-level tests that are easy to understand, efficient to write and relatively inexpensive to maintain. This is because there is a natural hierarchy of concepts on these three levels. Checking that delivery is available for two books involves putting a book in a shopping cart. Putting a book in a shopping cart involves a sequence of technical steps. Entering address details does as well. Breaking things down like that and combining lower level concepts into higher level concepts reduces the cognitive load and promotes reuse.

Easy to understand

From the bottom up, the clarity of the test increases. At the technical activity level, tests are very technical and full of clutter - it’s hard to see the forest for the trees. At the user interface workflow level, tests describe how something is done, which is easier to understand but still has too much detail to efficiently describe several possibilities. At the business rule level, the intention of the test is described in a relatively terse form. We can use that level to effectively communicate all different possibilities in important example cases. It is much more efficient to give another example as “Free delivery is not offered to customers who have one book” than to talk about logging in, putting only a single book in a cart, checking out etc. I’m not even going to mention how much cognitive overload a description of that same thing would require if we were to talk about clicking check-boxes and links.
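
To show how terse the business rule level can be, here is a minimal sketch in Python where each example case is a single row. The function `delivery_options_for` is a hypothetical workflow-level step with canned behaviour. In a real suite it would drive the UI, but the rows would stay exactly the same.

```python
# Each row is one business-level example: (books ordered, free delivery offered?)
FREE_DELIVERY_EXAMPLES = [
    (1, False),
    (2, True),
    (3, True),
]


def delivery_options_for(book_count):
    """Hypothetical workflow-level step, stubbed out for this sketch."""
    if book_count >= 2:
        return ["standard", "free"]
    return ["standard"]


def check_free_delivery_examples(examples=FREE_DELIVERY_EXAMPLES):
    """Return the rows that failed as (books, expected, actual) tuples."""
    failures = []
    for book_count, expected in examples:
        offered = "free" in delivery_options_for(book_count)
        if offered != expected:
            failures.append((book_count, expected, offered))
    return failures
```

Adding another possibility means adding one more row, not another page of clicks and form fields.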

Efficient to write

From the bottom up, the technical level of tests decreases. At the technical activity level, you need people who understand the design of a system, HTTP calls, the DOM and so on to write the test. To write tests at the user interface workflow level, you only need to understand the web site workflow. At the business rule level, you need to understand what the business rule is. Given a set of third-level components (e.g. login, adding a book), testers who are not automation specialists and business users can happily write the definition of second-level steps. This allows them to engage more efficiently during development and reduces the automation load on developers.

More importantly, the business rule and the workflow level can be written before the UI is actually there. Tests at these levels can be written before the development starts, and be used as guidelines for development and as acceptance criteria to verify the output.

Relatively inexpensive to maintain

The business rule level isn’t tied to any particular web site design or activity flow, so it remains stable and unchanged during most web user interface changes, be it layout or workflow improvements. The user interface workflow level is tied to the activity workflow, so when the flow for a particular action changes we need to rewrite only that action. The technical level is tied to the layout of the pages, so when the layout changes we need to rewrite or re-record only the implementation of particular second-level steps affected by that (without changing the description of the test at the business or the workflow level).

To continue with the free delivery example from above, if the login form was suddenly changed not to have a button but an image, we only need to re-write the “login” action at the technical level. From my experience, it is the technical level where changes happen most frequently - layout, not the activity workflow. So by breaking up the implementation into this hierarchy, we’re creating several layers of insulation and limiting the propagation of changes. This reduces the cost of maintenance significantly.
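
The login example can be sketched as follows. This is an illustration only: the step strings and function names are hypothetical, and `perform` stands for whatever the real automation tool uses to execute a technical step.

```python
# Technical level, before the change: login is submitted with a button.
def log_in_with_button(perform, user, password):
    perform(f"type '{user}' into the username field")
    perform(f"type '{password}' into the password field")
    perform("click the 'Log in' button")


# Technical level, after the change: login is submitted with an image.
def log_in_with_image(perform, user, password):
    perform(f"type '{user}' into the username field")
    perform(f"type '{password}' into the password field")
    perform("click the 'Log in' image")


def add_book_to_cart(perform):
    perform("click the 'Buy now' link")


# Workflow level: unchanged, whichever login implementation is plugged in.
def buy_one_book(perform, log_in):
    log_in(perform, "testuser", "testpassword")
    add_book_to_cart(perform)


if __name__ == "__main__":
    steps = []
    buy_one_book(steps.append, log_in_with_image)
    print("\n".join(steps))
```

The layout change is absorbed entirely by the login action. The workflow, and any business rule described on top of it, does not need to be touched.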

Implementing this in practice

There are many good ways to implement this idea in practice. Most test automation tools provide one or two levels of indirection that can be used for this. In fact, this is why I think Cucumber found such a sweet spot for browser based user interface testing. With Cucumber, step definitions implemented in a programming language naturally sit with developers, and this is where the technical activity level can be described. These step definitions can then be reused to create scenarios (user interface workflow level), and scenario outlines can be used to efficiently describe tests at the business rule level.

The new SLIM test runner for FitNesse provides similar levels of isolation. The bottom fixture layer sits naturally with the technical activity level. Scenario definitions can be used to describe workflows at the activity level. Scenario tables then present a nice, concise view at the business rule level.

Robot Framework uses “keywords” to describe tests, and allows us to define keywords either directly in code (which becomes the technical level) or by combining existing keywords (which becomes the workflow and business rule level).

The Page Object idea from Selenium and WebDriver is a good start, but stops short of finishing the job. It requires us to encapsulate the technical activity level into higher level “page” functionality. These can then be used to describe business workflows. It lacks the consolidation of workflows into the top business rule level, so make sure to create this level yourself in the code. (Antony Marcano also raised a valid point during CITCON Europe 09 that users think about business activities, not page functionality, so page objects might not be the best way to go anyway.)
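
Here is a minimal sketch, in Python, of adding the missing top level on top of page objects. It does not use the real Selenium API: `FakeDriver` is a hypothetical stand-in that only records actions, and the page classes, the address and the canned delivery rule are all assumptions made for this example.

```python
class FakeDriver:
    """Hypothetical stand-in for a browser session; it only records actions."""

    def __init__(self):
        self.actions = []
        self.cart_size = 0


class BookPage:
    """Page object: hides the locators and clicks for the book page."""

    def __init__(self, driver):
        self.driver = driver

    def buy_first_book(self):
        self.driver.actions.append("click first image with 'book' CSS class")
        self.driver.actions.append("click 'Buy now' link")
        self.driver.cart_size += 1


class CheckoutPage:
    """Page object: hides the address form and the delivery option list."""

    def __init__(self, driver):
        self.driver = driver

    def enter_address(self, address):
        self.driver.actions.append(f"fill in address: {address}")

    def delivery_options(self):
        # Canned behaviour of the fake shop, not a real page read.
        if self.driver.cart_size >= 2:
            return ["standard", "free"]
        return ["standard"]


# Workflow level: a business activity built from page objects.
def order_books(driver, count):
    book_page = BookPage(driver)
    for _ in range(count):
        book_page.buy_first_book()
    checkout = CheckoutPage(driver)
    checkout.enter_address("1 Example Street")
    return checkout.delivery_options()


# Business rule level: the layer that page objects alone do not give you.
def free_delivery_offered(book_count):
    return "free" in order_books(FakeDriver(), book_count)
```

Tests written against `free_delivery_offered` never mention a page, which also goes some way towards addressing the concern that users think in business activities rather than page functionality.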

TextTest works with xUseCase recorders, an interesting twist on this concept that allows you to record the technical level of step definitions without having to program it manually. This might be interesting for thick-client UIs where automation scripts are not as developed as in the web browser space.

With Twist, you can record the technical level and it will create fixture definitions for you. Instead of using that directly in the test, you can use “abstract concepts” to combine steps into workflow activities and then use that for business level testing. Or you can add fixture methods to produce workflow activities in code.

Beware of programming in text

Looking at UI tests at these three levels is, I think, generally a good practice. Responsibility for automation at the user interface level is something that each team needs to decide depending on their circumstances.

Implementing the workflow level in plain text test scripts (Robot Framework higher level keywords, Twist abstract concepts, SLIM scenario tables) allows business people and testers who aren’t automation specialists to write and maintain them. For some teams, this is a nice benefit because developers can then focus on other things and testers can engage earlier. That does mean, however, that there is no automated refactoring, syntax checking or anything like that at the user interface automation level.

Implementing the workflow level in code enables better integration and reuse, also giving you the possibility of implementing things below the UI when that is easier, without disrupting the higher level descriptions. It does, however, require people with programming knowledge to automate that level.
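
As a sketch of what implementing things below the UI might look like, here is a workflow step in Python that can take either route. Everything here is hypothetical: `FakeShop` stands in for the system under test, and the two registration methods stand in for driving the registration form and for calling the application directly.

```python
class FakeShop:
    """Hypothetical system under test with a UI route and a direct route."""

    def __init__(self):
        self.customers = set()
        self.ui_steps = 0

    def register_through_ui(self, name):
        # Stands in for: open the form, fill it in, submit it.
        self.ui_steps += 3
        self.customers.add(name)

    def register_directly(self, name):
        # Stands in for a call below the UI, such as a service or database.
        self.customers.add(name)


def given_registered_customer(shop, name, through_ui=False):
    """Workflow-level step: callers do not need to know which route is used."""
    if through_ui:
        shop.register_through_ui(name)
    else:
        shop.register_directly(name)
    return name in shop.customers
```

Because the higher level descriptions only refer to the step by name, switching the route is a change in one place.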

An interesting approach that one team I interviewed took is to train testers to write enough code to implement the user activity level in code as well. This doesn’t require advanced programming knowledge, and developers are there anyway to help if someone gets stuck.

Things to remember

To avoid shooting yourself in the foot with UI tests, remember these things:

- Think about UI test automation at three levels: business rules, user interface workflow and technical activity

- Even if the user interface workflow automation gets implemented in plain text, make sure to put one level of abstraction above it and describe business rules directly. Don’t describe rules as workflows (unless they genuinely deal with workflow decisions - and even then it’s often good to describe individual decisions as state machines).

- Even if the user interface workflow automation gets implemented in code, make sure to separate technical activities required to fulfil a step into a separate layer. Reuse these step definitions to get stability and easy maintenance later.

- Beware of programming in plain text.