A lot of my consulting work lately has been around helping teams see software quality more holistically - that it’s not something only testers (or only developers) should be concerned about. Doing that I’ve started formulating an idea that isn’t fully baked yet - but it helped me explain things better - and I’d love your comments on this.

A lot of the confusion about software quality comes from the fact that it means different things to different people, and the best definitions so far are zen-like, e.g. Weinberg’s ‘value to someone (who matters)’. They don’t describe things like technical code quality, which I intuitively know matters but which doesn’t directly provide value.

Lacking a holistic definition of quality, teams measure things like bug trends, code coverage etc. What gets measured gets optimised, so we end up optimising things beyond the point where it makes sense. Air and food are a necessity, but more air and food than we need do not really improve the quality of life. Similarly, technical correctness and performance are necessary, but going beyond a certain point gives us diminishing returns. As with any local optimisation, there is a risk that we hurt the whole pipeline by working on the wrong thing. As I was explaining this comparison to a client, it hit me that it might be worth trying to build a parallel between Abraham Maslow’s hierarchy of needs and software quality.

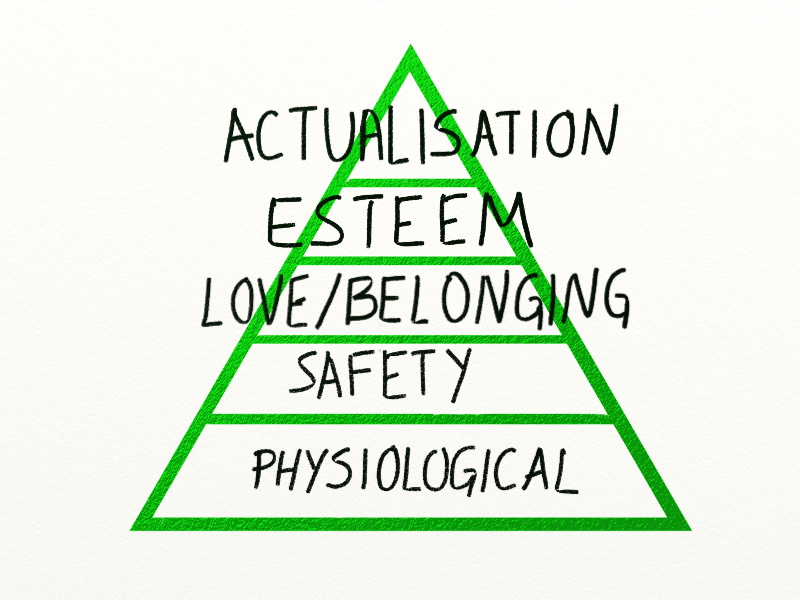

The famous Maslow’s pyramid lists human needs as a stack, from physiological needs necessary for basic functions (such as food, water), through safety (personal security, health, financial security), love and belonging (friendship, intimacy) and esteem (competence, respect), to self-actualisation (fulfilling potential). The premise of the hierarchy of needs is that when a lower level need is lacking, we disregard higher level needs. For example, when a person doesn’t have enough food, intimacy and respect, food is the most pressing thing. Another premise is that satisfying needs on the lower levels of the pyramid brings diminishing returns after some point. Our quality of life improves by satisfying higher level needs more. Eating more food than I really need brings obesity. More airport security than needed becomes a hassle. The key idea of the pyramid model is that once the basics are satisfied, we should work towards satisfying higher level goals.

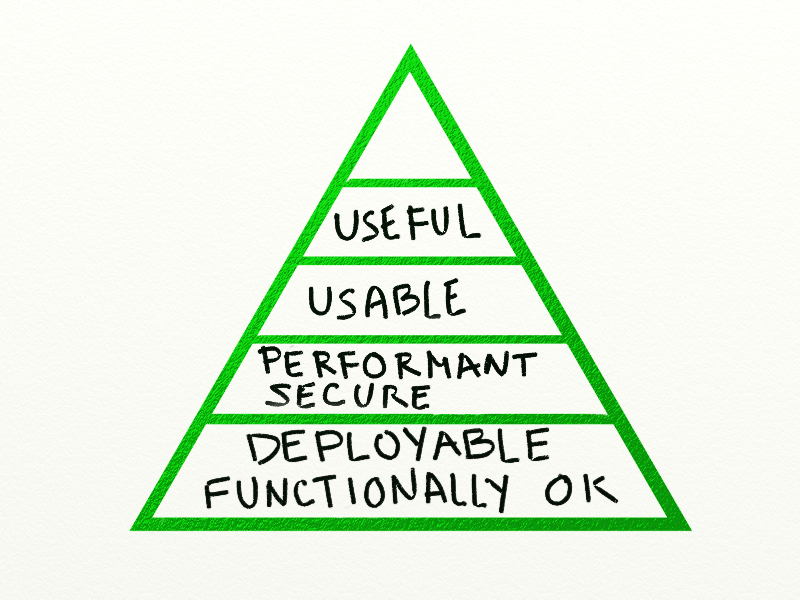

Maybe software quality isn’t as simple as a zen-like sentence, and maybe measuring bugs actually does provide some value. Maybe we should stop trying to make it simple and instead model quality on different levels. I tried modelling this with a client - and this is their particular case - but it might apply to others as well.

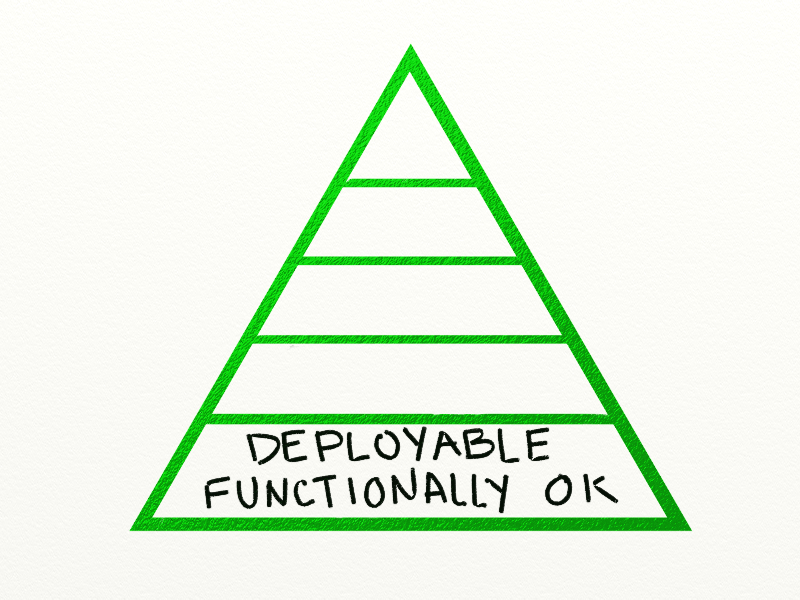

The lowest level of quality is physiological needs - if these things aren’t satisfied, software is completely useless. For this particular situation, we identified two things: that the software has to be deployable and it has to satisfy the minimum functionality so that “it works”. Activities such as TDD, functional testing (automated + manual), post-deployment testing and similar help us prove that this category of needs is satisfied. Measuring bug counts, code coverage and so on also works on this level. And similar to the human physiological needs, enough is enough and after some point there is no more value to be gained. Newer versions of Microsoft Office have thousands of features that nobody ever uses, because even Word 95 had enough. Investing in more features, developing, testing and maintaining them, is overkill.

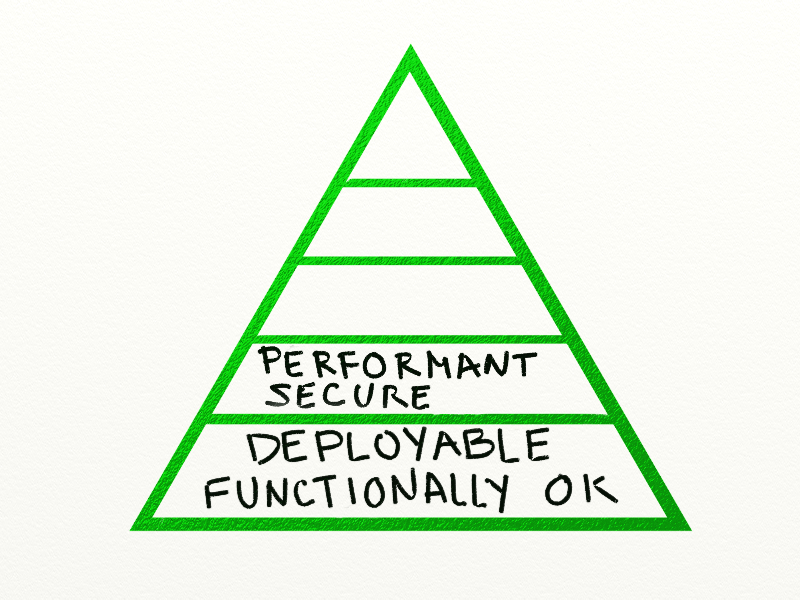

Once the software “works”, the second thing we need from it is to “work well”. Looking at parallels between human security needs and what we need from software, this is where a lot of what people typically call non-functionals goes in: performance, reliability, security etc. Activities such as architectural design and performance optimisations come into this level, and performance testing, penetration analysis, stress tests etc might prove that we have satisfied enough. And similar to human security needs, enough is enough. Recent examples of Apple iTunes store security questions demonstrate that more features than needed in this space piss people off, or waste money. Building a system that can handle millions of concurrent users when in the next year or so we’ll only have thousands is a horrible waste of time. I lost a lot of my own money on this a few years ago - we gold-plated a system architecturally instead of shipping more stuff that makes money.

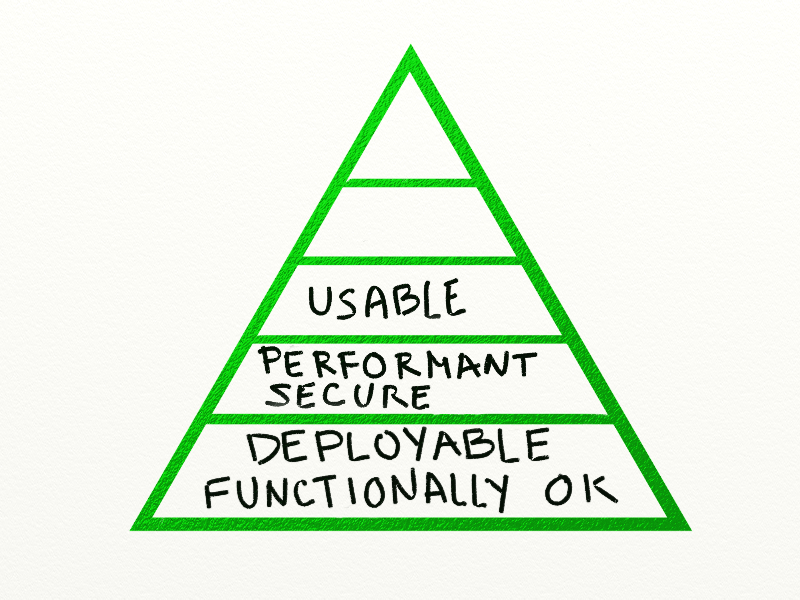

Providing the software performs well and is secure enough, the next level above is love and belonging - and now we cross over to the users of the software. Case in point is Twitter. Famous for its fail whale, Twitter’s second-level qualities are often just good enough, but that hasn’t stopped them from building a huge community of loyal users. Activities such as user interaction design, graphical design, community engagement and similar support software in fulfilling the needs on the level of love/belonging. Usability testing proves it. Of course, different types of software need different levels of this. In-house admin software requires a lot less love because people are forced to use it. On the other hand, user devotion and interaction can make or break consumer products.

So far this is nothing revolutionary. If I look back at most of my client engagements, these three levels are where most of the investment in specifications, development and testing was, and roughly proportional to the levels as well. Most of the investment goes into building in functionality and testing it. Performance and things like that are sometimes planned for, often reactively built in, and tested less frequently. I rarely worked with teams that were serious about usability and invested a lot of time or money designing for it and testing it. The pyramid model told this particular client that maybe they should start shifting their investments a bit. But the real surprise came out when we started looking at the top two levels.

Usability on its own can be overkill. No doubt about it. In Change by Design: How Design Thinking Transforms Organizations and Inspires Innovation, Tim Brown presents Nokia Ovi as a key success story to showcase design thinking.

> Barely a year later, Nokia announced Ovi, a new service offering that could be accessed through any of its multimedia devices. Design thinking had enabled Nokia not only to explore new possibilities but also to convince itself that these possibilities were sufficiently compelling to move away from its strongly entrenched and previously successful approach. The timing was right. Today Ovi is one of the operating business divisions of the company, and Nokia - a technology leader - has reinvented itself as a service provider.

Oh, the irony. If ever there was an IT equivalent of harakiri, this was it. Nokia - a technology leader - has “reinvented itself as a service provider” but nobody wants their service. Don’t get me wrong, I am not saying that user interaction design is bad, or that design thinking is wrong - just that it’s not the end. It is a need to be satisfied, but brings diminishing returns after a point. There are two more levels on the pyramid above it.

The key thing missing from the Nokia Ovi story is the fulfilment of the potential. Usability marks potential, and if nobody is using it do we care? The level above usability is usefulness. I did a study with a client recently and looking at the log files we determined that roughly 60% of the features aren’t used enough to justify investment in further maintenance. Maybe instead of investing a lot of money in functional testing we can invest in measuring the usefulness of software? One team I interviewed for Specification by Example: How Successful Teams Deliver the Right Software use automated tests as a target for development, but then disable most tests after they pass the first time. Instead, they protect against problems by delivering in small batches and measuring production use. They define quality more at the fourth level than the first level, which enables them to be much more productive. If the indicators expected by the business users aren’t there, the feature is taken out. Think of this as a business-bug.
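To make the idea of measuring usefulness a bit more tangible, here is a minimal Python sketch of the kind of log analysis I mean. Everything specific in it is an assumption for illustration only: the log format (timestamp, feature name and user on each line), the feature names and the `min_events` threshold are made up, not taken from the client study.

```python
from collections import Counter


def count_feature_usage(log_lines):
    """Count recorded uses per feature.

    Assumes a hypothetical log format: 'timestamp feature user' per line.
    """
    counts = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) < 3:
            continue  # skip lines that don't match the assumed format
        counts[parts[1]] += 1
    return counts


def underused_features(counts, all_features, min_events):
    """Return features with fewer than min_events recorded uses, sorted by name."""
    return sorted(f for f in all_features if counts.get(f, 0) < min_events)


# Hypothetical sample data, purely for illustration.
sample_log = [
    "2012-05-01T10:00 export_pdf alice",
    "2012-05-01T10:01 search bob",
    "2012-05-01T10:02 search alice",
    "2012-05-01T10:03 search carol",
    "malformed",
]
features = ["export_pdf", "search", "bulk_import"]

counts = count_feature_usage(sample_log)
candidates = underused_features(counts, features, min_events=2)
print(candidates)  # features that might be "business-bugs"
```

What counts as “used enough” is a business decision, so the threshold should come from the indicators the business users expect, not from the code.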

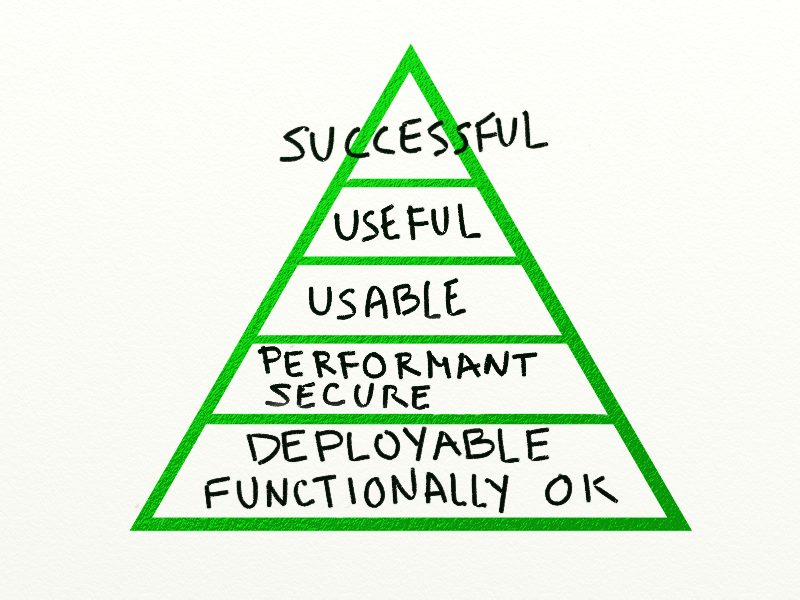

Finally, the fact that someone uses a feature doesn’t necessarily mean that the feature was right for the business in the first place. There is one more level above - corresponding to self-actualisation. Does the software achieve what it was originally intended to? Does it save money, earn money or protect money? Or whatever the key business goals were originally. If not, then it doesn’t really matter that people use it, that it is usable or performant or that all unit tests pass. This is the space where activities such as Impact Mapping and Feature Injection come in, along with the ideas of Build-Measure-Learn cycles and Actionable Metrics from The Lean Startup to prove them.

The top level is really where “more is better”, with perhaps a gradual transition to “good enough is good enough” on the two levels below, with the lowest two levels definitely falling into the “good enough” category. Yet from what I see most software teams invest, build and test only at the lowest two levels, gold-plating things without a way to explain why that is bad. Breaking things down in a visual model such as the one with the five levels of the pyramid here helped me get one team to start thinking better about what they really want to achieve. Your turn next!