Every single team I worked with over the last few years complained that they didn’t have enough time. Bullshit. People say the industry is like that, software teams are just under too much pressure. Bullshit again. People just don’t want to see what they don’t want to see.

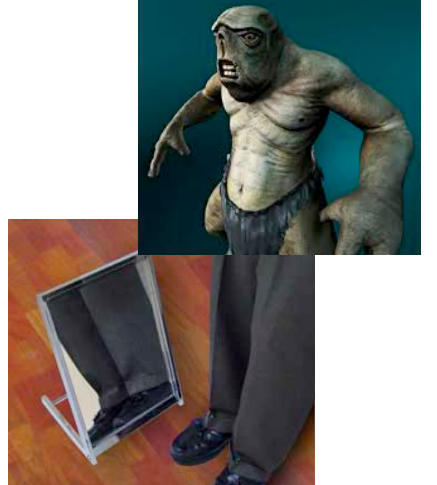

Nick’s shoes

When I was in high school, a group of us used to go out together. Before mobile phones, you couldn’t really call someone to shout at them if they were late. And my friend Nick was always the last person to arrive, often more than half an hour after everyone else. The reason: he was choosing shoes. Yes, it’s a stereotype that girls spend longer choosing what they’ll wear, but Nick was the world champion in this game. He had a small mirror to check out shoes and could go uninterrupted forever deciding which shade of black to wear tonight. If he only had the right pair of shoes, he’d get lucky tonight, or that’s what he thought. But he never did. The reason was simple: he was as ugly as a cave troll. The choice of shoes didn’t really matter.

This is exactly how most teams approach process improvement. We point a very small mirror at a piece of the pipeline that we like, and optimise the hell out of it. But we ignore that big ugly troll face that really hurts.

Don’t trust me? A few months ago, Forrester Research published a report summarising how most companies actually adopt agile. The title says everything: Water-Scrum-Fall. Big boys spend months deliberating over all the important decisions, then children can go and play their scrums, and then the software will marinate in staging for a while until the big boys are happy again. And teams in the middle of this insanity argue over continuous integration, craftsmanship, TDD and other kinds of shoes that don’t really make a difference. The big ugly troll face is still there.

Many problems mask themselves as “not enough time”. Not enough time to test properly, not enough time to automate testing, not enough time to clean up code, not enough time to investigate issues or coordinate on what we’ll do. Bullshit. “Not enough time” is a pessimistic statement - we can’t do anything about it. Time flows at the same speed regardless of our intentions or actions. We can restate the problem into something more useful - “too much work”. Now that’s something that can be solved nicely.

It comes down to three steps:

- kill software already made that isn’t needed

- kill software in making that won’t be needed

- kill software that was not successful

Kill software already made that isn’t needed

A common problem I see with teams is that a horrible amount of time is spent on maintaining software in proportion to its complexity, not its importance. A screen with five buttons will have five times more tests than a screen with one button, regardless of importance. A class with 500 lines will get more developer attention than a class with 50 lines, regardless of which one is used more.

One of my clients recently ran an analysis on their log files to check what was actually being used. Their software was built over 10 years with lots of experimentation from the business, but code was never really taken out. The result: roughly 70% of their features were no longer being used. Improving the test automation design or testing process was like Nick choosing shoes. The big ugly troll face was that 70% of the system was waste. There was no point in maintaining 70% of those brittle tests or code. No user would see the difference if they were gone, and the team would have much more time to deal with the rest properly.
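A usage analysis like that does not need heavy tooling. Here is a minimal sketch, assuming a hypothetical log format where the second whitespace-separated field is the feature name, and a hand-maintained list of known features. The function name, the feature names and the sample log lines are all made up for illustration; they are not the client's actual setup.

```python
from collections import Counter

def unused_features(log_lines, known_features):
    """Return (sorted list of features never seen in the log, usage counts)."""
    usage = Counter()
    for line in log_lines:
        # Assumed (hypothetical) log format: "<timestamp> <feature-name> <details...>"
        parts = line.split()
        if len(parts) >= 2 and parts[1] in known_features:
            usage[parts[1]] += 1
    unused = sorted(f for f in known_features if usage[f] == 0)
    return unused, usage

# Made-up sample data, for illustration only
sample_log = [
    "2012-03-01T10:00:00 search user=17",
    "2012-03-01T10:00:05 checkout user=17",
    "2012-03-01T10:01:12 search user=42",
]
features = {"search", "checkout", "wishlist", "gift-wrap"}

unused, usage = unused_features(sample_log, features)
share_unused = len(unused) / len(features)
print(unused, f"{share_unused:.0%} of features unused")
```

A real analysis would need a long enough time window to catch features that are used rarely but matter a lot, such as year-end reports, so treat the list of unused features as candidates to investigate, not as a kill list.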

The Attribute Component Capability matrix described in How Google Tests Software is a good technique to analyse the risk and importance of existing software, in particular to see where people invest time in testing. I’ve used it to drop 90% of test cases with a client without significantly increasing risk.

The purpose alignment model described in Stand Back and Deliver is another good heuristic to do further analysis and decommission entire subsystems that aren’t really adding a lot of value.

Kill software in making that won’t be needed

Very few teams I’ve worked with over the last few years actually know why a certain piece of software is being built. Yes, there are user stories, or at least text in a common user story format, but the value statement is often too vague and generic to be useful. Look at your user stories - can you use the value statement to compare stories against each other? How much value will a story bring, over what time? What are the key assumptions?

Measurability is the key thing here. For example, “more users” is not a good goal. The solution to deliver 50% more users over a year is probably completely different from the one that increases user numbers by 200% over two months. Once we have an agreement about how much and over what time, it becomes much easier to argue if we can deliver it. Thinking about measurements helped me to stop death march projects before they even started, align everyone’s expectations and redefine projects into something that is precise and deliverable.
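To see how different those two goals are, it helps to convert both to the same unit. The sketch below assumes steady compound growth month over month, which is a simplification of mine, and the function name is hypothetical.

```python
def required_monthly_growth(total_increase, months):
    """Compound monthly growth rate needed to grow by total_increase over months.

    total_increase is a fraction: 0.5 means 50% more users, 2.0 means 200% more.
    Assumes steady compound growth, which is a simplification.
    """
    return (1 + total_increase) ** (1 / months) - 1

slow = required_monthly_growth(0.5, 12)  # 50% more users over a year
fast = required_monthly_growth(2.0, 2)   # 200% more users over two months

print(f"{slow:.1%} per month vs {fast:.1%} per month")
```

Under that assumption the first goal needs roughly 3.4% growth per month and the second roughly 73%. Both could be written down as “more users”, but nobody would plan the same piece of software for them.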

By reviewing the key assumptions and narrowing down the scope of the next milestone to a single set of measurable business indicators, one of my clients removed roughly 80% of the planned scope. That was the ugly troll face - 80% of the planned system was scope creep. It doesn’t matter how well we implement or test that 80%, it’s still waste.

The purpose alignment model is again a good tool to analyse this, as are Impact Maps.

Kill software that was not successful

With hindsight, everything seems logical and easy. But when we decide to build something, we don’t really know if it will work out the way we expect. Software projects are based on assumptions. Before we build stuff, we should know what those assumptions are, so that we can check whether things worked out as expected.

Even when the value statement of a piece of software is clear (which itself is rare), very few teams actually measure the outcome at the end. We don’t measure whether a story really delivers value, so we don’t know if it delivered any at all. So even when a story fails to deliver the value, teams still keep the code and keep maintaining it. But that code is pure waste.

There is a big difference between code that is used and code that is successful (see my post about five levels of software quality), and the stuff that was not successful should be killed. Yet we don’t do that, because we measure inputs and not outcomes. Teams track velocity as story points or the number of items implemented. Instead, we should be tracking value delivered. That is the real velocity. That is the real outcome.

Any software change should move the value in the right direction. Any improvement to the software delivery process should speed up the rate at which we deliver value, not the rate at which we burn effort. For as long as we only track inputs - effort, story points, number of tasks - we’re essentially tracking time. Improved capacity for effort leads to more work, and we’ll always run into the “too much work” problem. If we track value delivered, we can actually reduce work and deliver more value by stopping the wrong things. We can choose valuable work over less valuable stuff, and avoid “not enough time”.
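As a rough illustration of tracking outcomes next to inputs, here is a small sketch. Everything in it is an assumption made for the example: the `Story` fields, the made-up stories and numbers, and the 50% threshold for calling a story unsuccessful. Value is in whatever business unit the team has agreed on.

```python
from dataclasses import dataclass

@dataclass
class Story:
    name: str
    points: int            # effort spent (an input)
    expected_value: float  # what the value statement promised
    measured_value: float  # what was actually observed after release

def summarise(stories, success_ratio=0.5):
    """Return (total points, total measured value, names of kill candidates).

    A story is a kill candidate when it delivered less than success_ratio
    of its expected value. The threshold is an arbitrary assumption.
    """
    points = sum(s.points for s in stories)
    value = sum(s.measured_value for s in stories)
    kill = [s.name for s in stories
            if s.measured_value < success_ratio * s.expected_value]
    return points, value, kill

# Made-up stories, for illustration only
stories = [
    Story("one-click reorder", 8, 100.0, 120.0),
    Story("social sharing", 13, 80.0, 5.0),
    Story("export to PDF", 5, 20.0, 18.0),
]

points, value, kill = summarise(stories)
print(f"points burned: {points}, value delivered: {value}, kill candidates: {kill}")
```

In this made-up set, the biggest story by points is also the one that delivered almost nothing. A velocity chart would count it as the best part of the iteration.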

The cases I presented are extreme, but imagine if you could drop 50% of unnecessary maintenance work and remove 50% of unnecessary planned work. The problems of not enough time to test properly, clean up code, analyse better and so on simply go away. Now go and look at that troll face and stop choosing shoes.