A more polished version of this article is in my book Fifty Quick Ideas To Improve Your User Stories

Many teams get stuck by using previous stories as documentation. They assign test cases, design diagrams or specifications to stories in digital task tracking tools. Such tasks become a reference for future regression testing plans or impact analysis. The problem with this approach is that it is unsustainable for even moderately complex products.

A user story will often change several functional aspects of a software system, and the same functionality will be impacted by many stories over a longer period of time. One story might put some feature in, another story might modify it later or take it out completely. In order to understand the current situation, someone has to discover all relevant stories, put them in reverse chronological order, find out about any potential conflicts and changes, and then come up with a complete picture.

Designs, specifications and tests explain how a system works currently - not how it changes over time. Using previous stories as a reference is similar to looking at a history of credit card purchases instead of the current balance to find out how much money is available. It is an error-prone, time-consuming and labour-intensive way of getting important information.

The reason why so many teams fall into this trap is that it isn’t immediately visible. Organising tests or specifications by stories makes perfect sense for work in progress, but not so much to explain things done in the past. It takes a few months of work until it really starts to hurt.

It is good to have clear confirmation criteria for each story, but that does not mean that test cases or specifications have to be organised by stories for eternity.

Divide work in progress and work already done, and manage specifications, tests and design documents differently for those two groups. Throw user stories away after they are done: tear up the cards, close the tickets, delete the related wiki pages. This way you won’t fall into the trap of having to manage documentation as a history of changes. Move the related tests and specifications over to a structure that captures the current behaviour, organised from a functional perspective.

Key Benefits

Specifications and tests organised by functional areas describe the current behaviour without the need for a reader to understand the entire history. This will save a lot of time in future analysis and testing, because finding the right information will be faster and less error-prone.

If your team is doing any kind of automated testing, those tests are likely already structured according to the current system behaviour and not a history of changes. Managing the remaining specifications and tests according to a similar structure can help avoid a split-brain syndrome where different people work from different sources of truth.

How to make this work

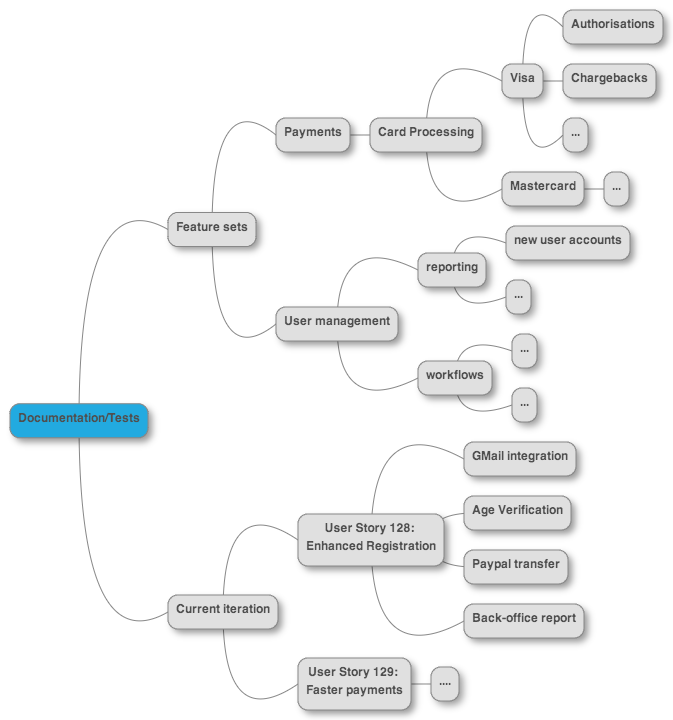

Some teams explicitly divide tests and specifications for work in progress and existing functionality. This allows them to organise information differently for different purposes. I often group tests for work in progress first by the relevant story, and then by functionality. I group tests for existing functionality by feature areas, then functionality. For example, if an enhanced registration story involves users logging in with their Google accounts and making a quick payment through PayPal, those two aspects of the story would be captured by two different tests, grouped under the story in a hierarchy. This allows us to divide work and assign different parts of a story to different people, but also ensure that we have an overall way of deciding when a story is done. After delivery, I would move the PayPal payment test to the Payments functional area, and merge it with any previous PayPal-related tests. The Google account login tests would go to the User Management functional area, under the registration sub-hierarchy. This allows me to quickly discover how any payment mechanism works, regardless of how many user stories were involved in delivery.
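
The move after delivery can be sketched in a few lines of Python. This is only an illustration under simple assumptions: both hierarchies are plain dictionaries, and the function name `deliver_story`, the test names and the scenario texts are all made up for the example rather than taken from any particular tool.

```python
def deliver_story(in_progress, existing, story, destinations):
    """Move a finished story's tests into the functional hierarchy.

    in_progress:  {story name: {test name: [scenarios]}}
    existing:     {(feature area, functionality): [scenarios]}
    destinations: {test name: (feature area, functionality)}
    """
    tests = in_progress[story]
    # Refuse to move anything until every test has a destination, so a
    # half-filed story never disappears from the work-in-progress view.
    unplaced = [name for name in tests if name not in destinations]
    if unplaced:
        raise ValueError(f"no functional area chosen for: {unplaced}")
    for name, scenarios in tests.items():
        # Merge with whatever already describes that functionality.
        existing.setdefault(destinations[name], []).extend(scenarios)
    # The story itself is thrown away once its tests are filed.
    del in_progress[story]


# Hypothetical data mirroring the registration example in the text.
in_progress = {
    "Enhanced registration": {
        "Log in with Google account": ["new user signs in with Google"],
        "Quick payment through PayPal": ["pay with linked PayPal account"],
    }
}
existing = {("Payments", "PayPal"): ["refund to PayPal account"]}

deliver_story(
    in_progress,
    existing,
    "Enhanced registration",
    {
        "Log in with Google account": ("User Management", "Registration"),
        "Quick payment through PayPal": ("Payments", "PayPal"),
    },
)
```

After the call, the story no longer exists anywhere, and the PayPal scenarios from the story sit next to the older PayPal scenarios under Payments.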

Other teams keep tests and specifications only organised by functional areas, and use tags to mark items in progress or related to a particular story. They would search by tag to identify all tests related to a story, and configure automated testing tools to execute only tests with a particular story tag, or only tests without the work-in-progress tag. This approach needs less restructuring after a story is done, but requires better tooling support.
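
A rough sketch of the tag-based variant, again in Python. The `Spec` type, the tag names and the helper functions are assumptions made for illustration; real test tools have their own tagging and filtering mechanisms.

```python
from dataclasses import dataclass, field

WIP_TAG = "work-in-progress"  # hypothetical tag name


@dataclass(frozen=True)
class Spec:
    """A test or specification filed under a functional area."""
    path: str  # location in the functional hierarchy
    tags: frozenset = field(default_factory=frozenset)


def for_story(specs, story_tag):
    """All specs related to one story, wherever they live in the hierarchy."""
    return [s for s in specs if story_tag in s.tags]


def regression_suite(specs):
    """Specs describing delivered behaviour: no work-in-progress tag."""
    return [s for s in specs if WIP_TAG not in s.tags]


def mark_done(spec, story_tag):
    """On delivery, drop the story tags; the spec stays where it is."""
    return Spec(spec.path, spec.tags - {WIP_TAG, story_tag})


# Hypothetical specs, already filed by functional area.
STORY = "story-enhanced-registration"
specs = [
    Spec("Payments/PayPal/quick payment", frozenset({WIP_TAG, STORY})),
    Spec("Payments/PayPal/refunds"),
    Spec("User Management/Registration/Google login", frozenset({WIP_TAG, STORY})),
]
```

Here `for_story(specs, STORY)` selects the two tagged items, `regression_suite(specs)` selects only the refunds spec, and finishing the story is a matter of removing tags rather than moving files.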

From a testing perspective, the existing feature sets hierarchy captures regression tests, and the work in progress hierarchy captures acceptance tests. From a documentation perspective, the existing feature sets hierarchy is documentation ‘as-is’, and the work in progress hierarchy is documentation ‘to-be’. Organising tests and specifications in this way allows teams to define different testing policies. For example, if a test in the feature sets area fails, we sound the alarm and break the current build. On the other hand, if a test in the current iteration area fails, that’s expected - we’re still building that feature. We are only really interested in the whole group of tests under a story passing for the first time.
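
The two policies could be expressed roughly as follows. This is a minimal Python sketch that assumes test results arrive as simple pass/fail dictionaries; the function name `evaluate_run` and the shape of the report are invented for the example.

```python
def evaluate_run(existing_results, in_progress_results):
    """Apply a different policy to each hierarchy.

    existing_results:    {test name: passed?}
    in_progress_results: {story name: {test name: passed?}}
    """
    # Any failure among existing features is a regression and breaks the build.
    regressions = sorted(
        name for name, passed in existing_results.items() if not passed
    )
    # Failures in work in progress are expected. The interesting event is
    # a story whose whole group of tests passes.
    stories_passing = sorted(
        story
        for story, tests in in_progress_results.items()
        if tests and all(tests.values())
    )
    return {
        "build_ok": not regressions,
        "regressions": regressions,
        "stories_passing": stories_passing,
    }


# Hypothetical results for one test run.
report = evaluate_run(
    existing_results={"PayPal refunds": True, "Password reset": True},
    in_progress_results={
        "Enhanced registration": {
            "Log in with Google account": True,
            "Quick payment through PayPal": False,
        }
    },
)
```

In this run the build stays green even though one in-progress test fails, and the story is not reported as passing until both of its tests do. Detecting that a story passes for the first time would need the previous run's report as well, which this sketch leaves out.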

Some test management tools are great for automation but not so good at publishing information so it can be easily discovered. If you use such a tool, it might be useful to create a visual navigation tool, such as a feature map. Feature maps are hierarchical mind maps of functionality with hyperlinks to relevant documents at each map node. They can help people quickly decide where to put related tests and specifications after a story is done, and produce a consistent structure.

Some teams need to keep a history of changes, for regulatory or auditing purposes. In such cases, adding test plans and documentation to the same version control system as the underlying code is a far more powerful approach than keeping that information in a task tracking tool. Version control systems will automatically track who changed what and when. They will also enable you to ensure that the specifications and tests follow the code whenever you create a separate branch or a version for a specific client.