JavaScript may not be the primary choice for most teams, but it is likely a necessary evil for the majority of people in our industry. Stack Overflow’s developer survey has ranked it the most popular programming language for five years running. A decade ago, testing JavaScript components was mostly an afterthought, left to manual work. Today, JavaScript is no longer a fringe platform for spinning web widgets but a core piece of business workflows. This means that most teams need to take testing their JavaScript components seriously.

I recently ran a survey to learn more about developer preferences and experiences with JavaScript testing. The tyranny of choice, typical of the whole ecosystem, was obvious: the 683 survey responses named more than fifty distinct test automation frameworks. With such a smorgasbord, nobody has the time to investigate every option. Yet such a big menu also raises the question of whether there’s something much better out there than the tools we’re using at the moment. So how should people choose the right tools in an ecosystem that changes so frequently?

The whole platform is moving at such a pace that tools become obsolete within a few years unless an active community supports them. Given that JavaScript tools and frameworks have a shorter life expectancy than dragonflies, a safe choice is a tool with an active community. In theory, this is reflected in popularity ratings. Popular tools make it significantly easier to transfer knowledge and involve other people in development, both when hiring for commercial projects and when attracting contributors to open-source work. But popularity on its own isn’t the best criterion. Because of the brutal Darwinism of JavaScript tools, older tools that survive longer are likely to be significantly more popular than the rest. At the same time, the ecosystem evolves quickly, so newer tools constantly emerge to cover more modern use cases and recent platform changes.

I asked people in the survey to rate their experience with the tools. Some questions looked at process benefits, such as the confidence to release frequently and the prevention of bugs. Other questions inquired into tool usability: how easily developers work with their tools, and how easily they can maintain and understand test cases. The combined scores for those two groups of questions give a good indication of how happy teams are with their chosen tools. Of course, the process benefits depend more on the contents of the test cases than on the tools themselves, but a big difference in the benefits rating could indicate that a tool is unsuitable for some key workflow.

From that viewpoint, a good choice for a tool would be something that:

- Makes it easy for others to get involved, and has a low risk of disappearing quickly (so it is reasonably popular, not necessarily #1).

- Does not lack any major features, and supports key modern platform needs (so the process benefit ratings are in line with the top tools).

- Makes it easy to write, understand and maintain test cases (best developer usability of tools passing the first two criteria).

A quick note on accuracy

Before we get into the numbers, here’s a quick note on data accuracy. Based on the 683 responses and the latest estimate of the number of developers worldwide, the margin of error for the survey results is 4% at a 95% confidence level. Also note that the benefits and usability questions asked people to rate their entire setup, not an individual tool, which matters when a combination of tools comes into play.
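The 4% figure is consistent with the standard normal-approximation formula for the margin of error of a proportion. The sketch below assumes simple random sampling and the worst-case proportion p = 0.5; the survey’s own calculation may have differed. With a population in the millions, the finite population correction is negligible, so it is left out. `marginOfError` is a hypothetical helper name.

```typescript
// Margin of error for a surveyed proportion, using the normal approximation.
// Assumptions: simple random sampling, worst-case proportion p = 0.5, and a
// population large enough that the finite population correction is ~1.
function marginOfError(sampleSize: number, z = 1.96, p = 0.5): number {
  if (sampleSize <= 0) {
    throw new RangeError("sampleSize must be positive");
  }
  // z = 1.96 corresponds to a 95% confidence level.
  return z * Math.sqrt((p * (1 - p)) / sampleSize);
}

const moe = marginOfError(683);
console.log(`${(moe * 100).toFixed(2)}%`); // 3.75%
```

Rounded, that gives the 4% quoted above. Passing the size of a smaller subgroup instead of 683 yields a wider margin, which is why the smaller segments later in this article need more data.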

The original research also contained questions on related tools such as browser runners and continuous integration platforms. If you’d like to dig deeper into the data or see the answers to other questions, download the full results.

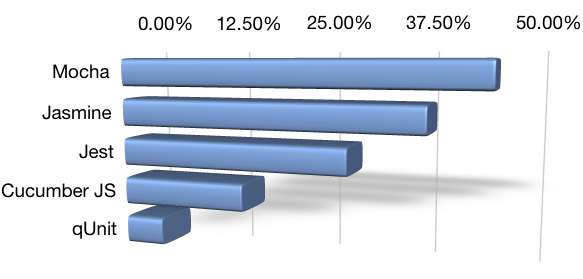

What are the most popular tools?

Looking just at popularity, three tools clearly stand out. The first two, Mocha and Jasmine, will be no surprise to anyone who has been involved in the community for more than a few years, but the bronze medal, Jest, was quite a surprise to me.

Here is how the users rated their experiences with the top three tools:

| Tool | Responses | Process rating | Usability rating |
|---|---|---|---|
| Mocha | 45% | 69% | 62% |
| Jasmine | 38% | 66% | 59% |
| Jest | 29% | 67% | 63% |

Mocha wins slightly in terms of positive effects on the development process, but the other tools follow closely, so there’s likely no major issue with any of the top three for key modern workflows. Jest leads in developer usability, insignificantly over Mocha (63% against 62%) but significantly over Jasmine (59%). All the other tools are significantly less popular.
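The three selection criteria listed earlier can be applied mechanically to this table. The sketch below is only an illustration: the 20% popularity cut-off and the 4-point process tolerance (borrowed from the survey’s margin of error) are assumptions chosen for the example, not thresholds the survey defined, and `pickTool` is a hypothetical helper.

```typescript
// Ratings copied from the table of the top three tools.
interface ToolRating {
  name: string;
  responses: number; // share of respondents using the tool, in percent
  process: number; // process benefits rating, in percent
  usability: number; // developer usability rating, in percent
}

const topThree: ToolRating[] = [
  { name: "Mocha", responses: 45, process: 69, usability: 62 },
  { name: "Jasmine", responses: 38, process: 66, usability: 59 },
  { name: "Jest", responses: 29, process: 67, usability: 63 },
];

// Applies the three selection criteria in order. Both thresholds are
// illustrative assumptions: "reasonable popularity" means at least
// minResponses percent of respondents, and "in line with the top tools"
// means within `tolerance` points of the best process rating.
function pickTool(
  tools: ToolRating[],
  minResponses = 20,
  tolerance = 4,
): ToolRating | undefined {
  // Criterion 1: reasonably popular.
  const popular = tools.filter((t) => t.responses >= minResponses);
  if (popular.length === 0) return undefined;
  // Criterion 2: process benefits close to the best remaining candidate.
  const bestProcess = Math.max(...popular.map((t) => t.process));
  const capable = popular.filter((t) => bestProcess - t.process <= tolerance);
  // Criterion 3: best developer usability among the survivors.
  return capable.reduce((best, t) => (t.usability > best.usability ? t : best));
}

console.log(pickTool(topThree)?.name); // Jest
```

With these thresholds, all three tools pass the first two filters and Jest wins on usability; raising `minResponses` to 30 drops Jest and leaves Mocha as the pick.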

Careful readers will notice that the percentages add up to more than 100%, because many teams use more than one tool. (There will be more on this later, with a very interesting punchline.) On the flip side, roughly 12% of the survey respondents do not use any automated testing tools for their JavaScript code!

When responses from teams that use more than one tool are removed, the rankings stay the same:

| Tool | Responses | Process rating | Usability rating |
|---|---|---|---|
| Mocha | 16% | 69% | 64% |
| Jasmine | 15% | 64% | 59% |
| Jest | 10% | 64% | 64% |
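Because respondents could name several tools, each tool’s share is counted against the number of respondents, so the shares can sum to more than 100%. The sketch below shows that tally and a single-tool filter along the lines of the second table. The response shape, the helper names and the toy data are hypothetical; they are not the survey data or the actual analysis code, and keeping all respondents as the denominator for the filtered table is an assumption.

```typescript
// One survey response: the testing tools a team reported (multi-select).
interface SurveyResponse {
  tools: string[];
}

// Share of respondents mentioning each tool. The denominator defaults to the
// number of responses passed in; since one response can name several tools,
// the shares can sum to more than 1 (100%).
function toolShares(
  responses: SurveyResponse[],
  denominator: number = responses.length,
): Map<string, number> {
  const counts = new Map<string, number>();
  for (const r of responses) {
    // Count each tool at most once per response.
    new Set(r.tools).forEach((tool) => {
      counts.set(tool, (counts.get(tool) ?? 0) + 1);
    });
  }
  const shares = new Map<string, number>();
  counts.forEach((count, tool) => shares.set(tool, count / denominator));
  return shares;
}

// Keeps only teams that reported exactly one tool.
function singleToolOnly(responses: SurveyResponse[]): SurveyResponse[] {
  return responses.filter((r) => new Set(r.tools).size === 1);
}

// Hypothetical toy data, not taken from the survey.
const sample: SurveyResponse[] = [
  { tools: ["Mocha"] },
  { tools: ["Mocha", "Jasmine"] },
  { tools: ["Jest", "Mocha"] },
  { tools: [] }, // no automated testing tool at all
];

let total = 0;
toolShares(sample).forEach((share) => {
  total += share;
});
console.log(total); // 1.25, i.e. 125%

// Single-tool teams only, still expressed relative to all respondents.
console.log(toolShares(singleToolOnly(sample), sample.length).get("Mocha")); // 0.25
```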

The recent popularity of Jest is probably driven by the meteoric rise of React, as both come from Facebook. In the survey, about 80% of people using Jest also use React.

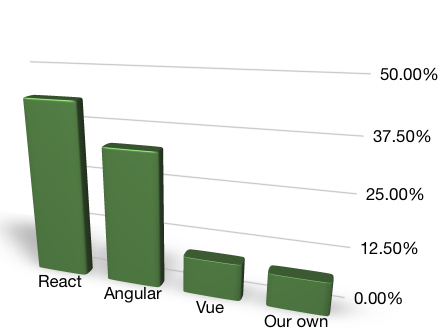

Of course, the type of software you work on makes a big difference for the choice of the testing toolkit. In the JavaScript space, this often closely relates to a front-end framework. Here is the list of frameworks used by the survey respondents.

Here’s how the numbers change when focusing on a few popular application types:

React

Roughly 43% of the respondents use React in their work. Jest and Mocha seem to be similarly popular for this use case, but Jest leads in developer usability for testing React applications.

| Tool | Responses | Process rating | Usability rating |
|---|---|---|---|
| Jest | 24% | 67% | 65% |
| Mocha | 24% | 70% | 63% |
| Jasmine | 13% | 67% | 60% |

Angular

Roughly 34% of the respondents use some version of Angular. In the Angular world, Jasmine is by far the most popular choice, Jest drops off the radar, and another tool pops up in third place: Cucumber JS. People using Cucumber seem to rate their test setup higher, but the overall differences here are inconclusive, so I’d need more data before making any big recommendations.

| Tool | Responses | Process rating | Usability rating |
|---|---|---|---|
| Jasmine | 21% | 66% | 59% |
| Mocha | 14% | 66% | 58% |
| Cucumber JS | 6% | 67% | 62% |

Back-end applications

Roughly 20% of the respondents work only on back-end applications. Unfortunately, the sample here gets small and the numbers become less trustworthy, but Mocha seems to be significantly more popular than the rest, and better rated. I would, however, like to collect more responses for this case before drawing any conclusions. Maybe you can help?

| Tool | Responses | Process rating | Usability rating |
|---|---|---|---|
| Mocha | 7% | 72% | 63% |
| Jasmine | 3% | 64% | 59% |
| Jest | 2% | 65% | 54% |

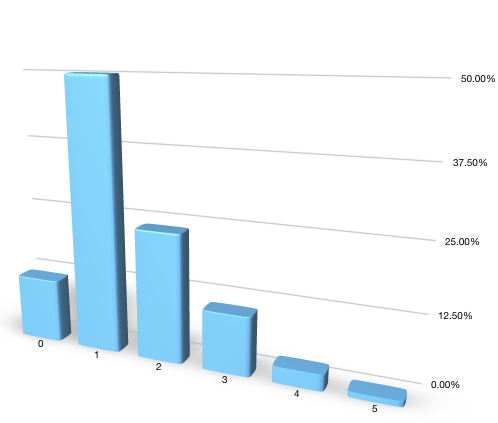

Tool combinations

As I mentioned before, the numbers add up to more than 100% because many teams use more than one tool. In fact, only about half of the teams used a single tool.

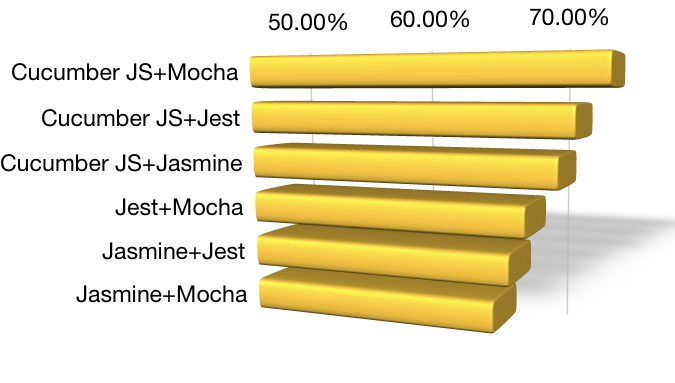

Looking at the popular combinations, I was quite surprised by the results!

| Tools | Responses | Process rating | Usability rating |
|---|---|---|---|
| Jasmine + Mocha | 18% | 67% | 59% |
| Jest + Mocha | 14% | 69% | 61% |
| Jasmine + Jest | 10% | 68% | 59% |
| Cucumber JS + Mocha | 7% | 74% | 65% |
| Cucumber JS + Jasmine | 6% | 71% | 64% |
| Cucumber JS + Jest | 4% | 72% | 70% |

The most popular combination is Jasmine and Mocha, which is very curious, as both tend to solve the same problem. I can only assume that people used one tool early on and then switched to the other, so different parts of the code are covered by different tools. Another possibility, based on the earlier observations about back-end testing and Angular, is that people use Mocha for back-end code and Jasmine for front-end code. However, that’s not the most interesting part of the tool combination table.

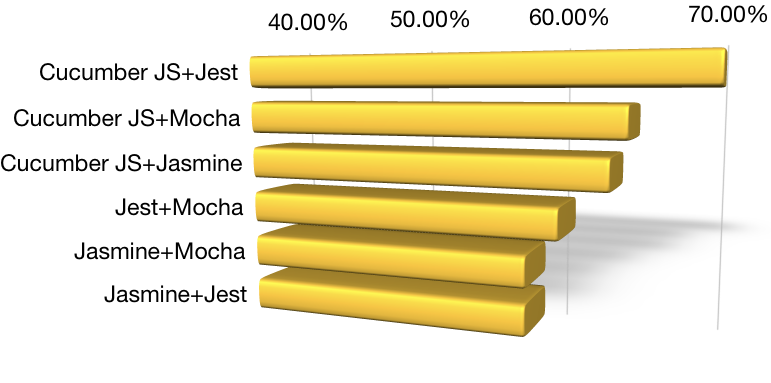

Check out the pattern emerging when ordering by benefits:

Although Cucumber JS on its own isn’t popular enough to make the top three, combining Cucumber with one of the more popular tools seems to make a big difference for bug prevention and deployment confidence. Cucumber and Mocha together scored 74% on the benefits scale, higher than any other tool in isolation or any other combination.

A similar pattern emerges when ordering by usability:

The combination of Cucumber and Jest seems to win by a wide margin in terms of making developers happy about writing, understanding and maintaining automated tests. Its 70% score is higher than that of any other isolated tool or any other combination; the runner-up, Cucumber and Mocha, scored 65%.
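Ordering the combinations table by each rating makes the pattern easy to check. Here is a small TypeScript sketch that uses the values from the table as-is; `rankBy` is a hypothetical helper name.

```typescript
interface ComboRating {
  tools: string;
  responses: number; // percent of respondents
  process: number; // process benefits rating, percent
  usability: number; // developer usability rating, percent
}

// Rows copied from the tool combinations table.
const combos: ComboRating[] = [
  { tools: "Jasmine + Mocha", responses: 18, process: 67, usability: 59 },
  { tools: "Jest + Mocha", responses: 14, process: 69, usability: 61 },
  { tools: "Jasmine + Jest", responses: 10, process: 68, usability: 59 },
  { tools: "Cucumber JS + Mocha", responses: 7, process: 74, usability: 65 },
  { tools: "Cucumber JS + Jasmine", responses: 6, process: 71, usability: 64 },
  { tools: "Cucumber JS + Jest", responses: 4, process: 72, usability: 70 },
];

// Returns a sorted copy of the rows, highest rating first; the input is not mutated.
function rankBy(rows: ComboRating[], key: "process" | "usability"): ComboRating[] {
  return rows.slice().sort((a, b) => b[key] - a[key]);
}

// Print the three best-rated combinations for each ordering.
for (const key of ["process", "usability"] as const) {
  const leaders = rankBy(combos, key)
    .slice(0, 3)
    .map((c) => `${c.tools} (${c[key]}%)`);
  console.log(`${key}: ${leaders.join(", ")}`);
}
```

In both orderings, the three Cucumber combinations take the top three places.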

Conclusions

We don’t use React, so I hadn’t really paid attention to Jest before, but the results of this survey will make me think twice about the tool choice for our next project. Jest seems to be crossing over into a more generic tool, as confirmed by the 20% of its users who do not use React. Although Jest is a relatively new kid on the block, given Facebook’s corporate backing it’s likely to stay around for a long time.

The other interesting conclusion is that combining Cucumber with any of the popular unit-testing tools increases the overall rating quite significantly. I assume this is because Cucumber tends to solve a different problem from Jasmine, Mocha or Jest. The three most popular tools are aimed at developers, whereas Cucumber is geared more towards cross-functional work and looking at quality from a slightly higher level. Extracting higher-level test cases from a developer-oriented tool likely makes both parts cleaner and easier to work with. Although most people (wrongly) equate Cucumber with browser-level user interface testing, it’s a decent tool for capturing business-readable scenarios that domain experts can validate, so it is a valid choice for back-end work as well.
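To make the idea of business-readable scenarios concrete, here is a deliberately tiny toy: plain-language steps are matched against patterns and dispatched to code, which is roughly the role Cucumber’s step definitions play. This is not Cucumber’s API. `runScenario`, the step patterns and the shopping-cart domain are all hypothetical, and a real project would use Cucumber JS itself.

```typescript
// Toy step matcher illustrating the Given/When/Then idea. NOT the Cucumber
// API: the patterns, the cart domain and runScenario are hypothetical.
type World = { total: number; discount: number };
type StepHandler = (world: World, ...args: string[]) => void;

// Each step definition pairs a pattern with the code that implements it.
const steps: Array<[RegExp, StepHandler]> = [
  [/^a cart worth (\d+) EUR$/, (w, amount) => { w.total = Number(amount); }],
  [/^the customer applies a (\d+)% voucher$/, (w, pct) => { w.discount = Number(pct); }],
  [/^the customer pays (\d+) EUR$/, (w, expected) => {
    // Integer arithmetic avoids floating-point surprises for whole percentages.
    const actual = (w.total * (100 - w.discount)) / 100;
    if (actual !== Number(expected)) {
      throw new Error(`expected ${expected} EUR, got ${actual} EUR`);
    }
  }],
];

// Runs each scenario line against the first matching step definition.
function runScenario(scenario: string): World {
  const world: World = { total: 0, discount: 0 };
  for (const raw of scenario.trim().split("\n")) {
    // Drop the Gherkin-style keyword; it only affects readability here.
    const line = raw.trim().replace(/^(Given|When|Then|And)\s+/, "");
    const match = steps.find(([pattern]) => pattern.test(line));
    if (!match) throw new Error(`undefined step: ${line}`);
    const [pattern, handler] = match;
    const captures = line.match(pattern)!.slice(1);
    handler(world, ...captures);
  }
  return world;
}

// The scenario text is something a domain expert can read and validate.
const scenario = `
  Given a cart worth 200 EUR
  When the customer applies a 10% voucher
  Then the customer pays 180 EUR
`;
runScenario(scenario); // completes without throwing
```

The point of the split is that the scenario text stays readable for non-developers, while the checks behind each step remain ordinary code that a unit-testing tool could also exercise.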

My overall conclusion is that the combination of Jest and Cucumber seems to strike the best balance between process benefits and developer usability. If you’re starting a new project with a clean slate, it seems a good choice of test frameworks, closely followed by Mocha and Cucumber.

Having said that, the numbers here are just above the margin of error, and I’d love to collect more data to validate this assumption. If you work with JavaScript and did not participate in the original survey, please help create a more complete picture by filling in a quick form.

Photo by Tim Graf on Unsplash