To gather requirements, business analysts often work through a number of realistic examples with customers, such as existing report forms or existing work processes. These examples are then translated into abstract requirements in the first step of the Telephone game. Developers extract knowledge from those requirements and translate it into executable code, which is handed over to testers. Testers then take the specifications, extract knowledge from them and translate it into verification scripts, which they apply to the code handed over by the developers. In theory, this works just fine and everyone is happy. In practice, the process is fundamentally flawed and leaves huge communication gaps at every step. Important ideas fall through those gaps and mysteriously disappear. With every translation, information gets distorted and misunderstood, leading to serious mistakes once the ideas come out at the other end of the pipe.

Interestingly, examples also reappear several times later in the process. Abstract requirements and specifications leave a lot of room for ambiguity and misunderstanding. To confirm or reject their ideas about those requirements, developers often resort to examples, trying to put things into a more concrete perspective when they discuss edge cases with business experts or customers. Test scripts produced to verify the system are also examples of how the system behaves. They capture a very concrete workflow, with clearly defined inputs and expected outputs.

Concrete, realistic examples explain how things really work much better than abstract requirements do. Examples are simply a very effective communication technique, and we use them so naturally that we are often not even aware of it.

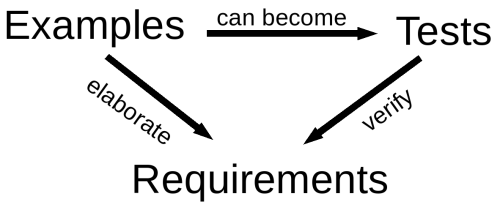

Trying to put things into a better perspective, the participants of the second Agile Alliance Functional Testing Tools workshop, held in August 2008 in Toronto, came up with this diagram explaining the relationship between examples, requirements and tests:

Examples, requirements and tests are essentially tied together in a loop.

This close link between requirements, tests and examples signals that they all deal with closely related concepts. The problem is that every time examples show up on the timeline of a software project, people have to reinvent them. Customers and business analysts use one set of examples to discuss the system requirements. Developers come up with their own examples to talk to business analysts. Testers come up with yet another set of examples to write test scripts. Because of the Telephone game, these examples might not describe the same things (and often they do not). Examples that developers invent are based on their own understanding of the requirements. Test scripts are derived from what testers think the system should do.

Requirements are often derived from examples, and examples also end up as tests. With enough examples, we can build a full description of the future system: a specification by example. We can then discuss those examples to make sure that everyone understands them correctly. By formalising examples, we get strict requirements for the system as well as a good set of tests. If we use the same examples throughout the project, from discussions with customers and domain experts to testing, then neither developers nor testers have to come up with their own examples in isolation. By consistently using the same set of examples, we can eliminate the effects of the Telephone game.
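To make the idea of one shared set of examples more concrete, here is a minimal Python sketch. It assumes a purely hypothetical business rule (orders totalling 100 or more qualify for free delivery); the rule, the table and the function names are invented for illustration and are not taken from any particular tool. The same table of examples plays two roles: it is the requirement agreed with the customer, and it is the test run against the developers' code.

```python
# Hypothetical business rule, used only for illustration:
# orders totalling 100 or more qualify for free delivery.

# One shared set of examples, agreed with the customer.
# Each row: (order_total, expected_free_delivery)
DELIVERY_EXAMPLES = [
    (20, False),
    (99.99, False),
    (100, True),    # boundary case: exactly 100 qualifies
    (250, True),
]


def qualifies_for_free_delivery(order_total: float) -> bool:
    """Developers' implementation of the hypothetical rule."""
    return order_total >= 100


def check_examples(examples) -> list:
    """Run every agreed example against the implementation.

    Returns a list of (order_total, expected, actual) tuples for the
    examples that do not match; an empty list means all examples pass.
    """
    failures = []
    for total, expected in examples:
        actual = qualifies_for_free_delivery(total)
        if actual != expected:
            failures.append((total, expected, actual))
    return failures


if __name__ == "__main__":
    failures = check_examples(DELIVERY_EXAMPLES)
    print("all examples pass" if not failures else f"failures: {failures}")
```

In practice the examples would usually be kept in a form that customers and business analysts can read and discuss directly, but the design choice is the same: nobody writes a separate, privately invented set of examples, so a disagreement about the boundary case shows up as a failing row rather than as a surprise late in the project.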