Five years ago, Lisa Crispin and Janet Gregory brought testing kicking and screaming into agile, with their insanely influential Agile Testing book. They are now working on a follow-up. This got me thinking that it’s about time we remodelled one of our sacred cows: the Agile Testing Quadrants. Although Brian Marick introduced the quadrants a few years earlier, it is undoubtedly Crispin and Gregory who gave the Agile Quadrants their wings. The Quadrants were the centre-piece of the book, the one thing everyone easily remembered. Now is the right time to forget them.

XP is primarily a methodology invented by developers for developers. Everything outside of development was boxed into the role of the XP Customer, which translates loosely from devspeak to plain English as “not my problem”. So it took a while for the other roles to start trying to fit in. Roughly ten years ago, companies at large started renaming business analysts to product owners and project managers to scrum masters, trying to put them into agile boxes. Testers, forever the poor cousins, were not an interesting target group for expensive certification. So they were left utterly confused about their role in the brave new world. For example, upon hearing that their company was adopting Scrum, the entire testing department of one of our clients quit within a week. Developers worldwide, including me, secretly hoped that they would be able to replace those pesky pedants from the basement with a few lines of JUnit. And for many people out there, Crispin and Gregory saved the day. As the community started re-learning that there is a lot more to quality than just unit testing, the Quadrants became my primary conversation tool to reduce confusion. I was regularly using that model to explain, in less than five minutes, that there is still a place for testers, and that only one of the four quadrants is really about rapid automation with unit testing tools. The Quadrants helped me facilitate many useful discussions on the big picture missing from typical developers’ view of quality, and helped many testers figure out what to focus on.

The Quadrants were an incredibly useful thinking model for 200x. However, I’m finding it increasingly difficult to fit the software world of 201x into the same model. With shorter iterations and continuous delivery, it’s difficult to draw the line between activities that support the team and those that critique the product. Why would performance tests not be aimed at supporting the team? Why are functional tests not critiquing the product? Why would exploratory tests be only for business stuff? Why is UAT separate from functional testing? I’m not sure if the original intention was to separate activities into those that happen during development and those that happen after it, but most people out there seem to think about the horizontal Quadrants axis in terms of time (there is nothing in the original picture that suggests that, although Marick talks about a “finished product”). This creates some unjustifiable conclusions - for example that exploratory testing has to happen after development. The axis also creates a separation that I always found difficult to justify, because critiquing the product can support the team quite effectively, if it is done in a timely manner. Taking that to the extreme, with lean startup methods, a lot of critiquing the product should happen before a single line of production code is written.

The Quadrants don’t fit well with all the huge changes that happened in the last five years, including the surge in popularity of continuous delivery, devops, build-measure-learn, product managers’ obsession with big-data analytics, and exploratory and context-driven testing. Because of that, a lot of the stuff teams do now spans several quadrants. The more I try to map things that we do now, the more the picture looks like a crayon self-portrait that my three-year-old daughter drew on our living room wall.

The vertical axis of the Quadrants is still useful to me. Separation of business-oriented tests and technology-oriented tests is a great rule of thumb, as far as I’m concerned. But the horizontal axis is no longer relevant. Iterations are getting shorter, delivery is becoming more continuous, and a lot of the stuff is just merging across that line. For example, Specification by Example helps teams to completely merge functional tests and UAT into something that is continuously checked during development. Many teams I worked with recently run performance tests during development, primarily so that frequent changes don’t mess things up, which is more about supporting the team than anything else.

Dividing tests into those that support the team and those that evaluate the product is not really helping to facilitate useful discussions any more, so it’s time to break that model.

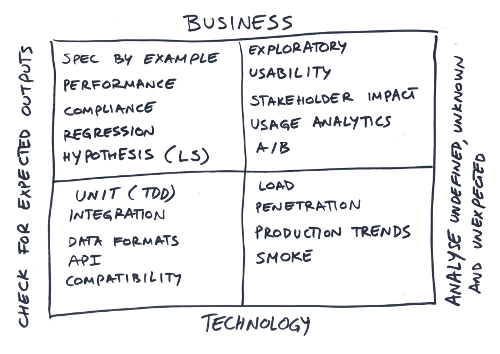

The context-driven testing community argues very hard that looking for expected results isn’t really testing - instead they call that checking. Without getting into an argument about what is or isn’t testing, the division was quite useful to me for many recent discussions with clients. Perhaps that is a more useful second axis for the model: the difference between looking for expected outcomes and analysing aspects without a definite yes/no answer, where results require skilful analytic interpretation. Most of the innovation these days seems to happen in the second part anyway. Checking for expected results, both from a technical and business perspective, is now pretty much a solved problem.

Thinking about checking expected outcomes vs analysing outcomes that weren’t pre-defined helps to explain several important issues:

- We can split security into penetration testing and investigations (not pre-defined) and a lot of functional tests around compliance such as encryption, data protection, authentication etc. (essentially all checking for pre-defined expected results), debunking the stupid myth that security is “non-functional”.

- We can split performance into load tests (where will it break?) and running business scenarios to prove agreed SLAs and capacity, continuous delivery style, debunking the stupid myth that performance is a technical concern.

- We can have a nice box for ACC-matrix driven exploration of capabilities, as well as a meaningful discussion about having separate technical and business-oriented exploratory tests.

- We can have a nice box for build-measure-learn product tests, and have a meaningful discussion on how those tests require a defined hypothesis, and how that is different from just pushing stuff out and seeing what happens through usage analytics.

- We can have a nice way of discussing production log trends as a way of continuously testing technical stuff that’s difficult to automate before deployment, but still useful to support the team. We can also have a nice way of differentiating those tests from business-oriented production usage analytics.

- We could avoid silly discussions on whether usability testing is there to support the team or evaluate the product.

Most importantly, by using that horizontal axis, we can raise awareness about a whole category of things that don’t fit into typical test plans or test reports, but are still incredibly valuable. The 200x quadrants were useful because they raised awareness about a whole category of things in the upper left corner that most teams weren’t really thinking of, but are now taken as common sense. The 201x quadrants can help us raise awareness about some more important issues for today.
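To make the two axes concrete, here is a minimal Python sketch that sorts a few of the activities mentioned above into boxes. The names `Focus`, `Outcome`, `Activity` and `group_by_box` are made up for illustration, and the placement of each activity is just one reading of the list above, not a definitive classification.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class Focus(Enum):
    """Vertical axis: who the test is oriented towards."""
    BUSINESS = "business"
    TECHNOLOGY = "technology"


class Outcome(Enum):
    """Proposed horizontal axis: checking vs analysing."""
    CHECKING = "checking pre-defined expected results"
    ANALYSING = "analysing outcomes that were not pre-defined"


@dataclass(frozen=True)
class Activity:
    name: str
    focus: Focus
    outcome: Outcome


# Hypothetical placements, assumed for illustration only.
ACTIVITIES = [
    Activity("unit tests", Focus.TECHNOLOGY, Outcome.CHECKING),
    Activity("SLA business scenarios", Focus.BUSINESS, Outcome.CHECKING),
    Activity("production log trends", Focus.TECHNOLOGY, Outcome.ANALYSING),
    Activity("production usage analytics", Focus.BUSINESS, Outcome.ANALYSING),
    Activity("technical exploratory tests", Focus.TECHNOLOGY, Outcome.ANALYSING),
    Activity("business exploratory tests", Focus.BUSINESS, Outcome.ANALYSING),
]


def group_by_box(activities):
    """Group activity names by their (focus, outcome) box."""
    boxes = defaultdict(list)
    for activity in activities:
        boxes[(activity.focus, activity.outcome)].append(activity.name)
    return dict(boxes)


if __name__ == "__main__":
    for (focus, outcome), names in group_by_box(ACTIVITIES).items():
        print(f"{focus.value} / {outcome.value}: {', '.join(names)}")
```

Something like security or performance would show up as more than one `Activity`, one per box, which is the whole point of splitting along this axis.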

That’s my current thinking about it. Perhaps the model can look similar to the picture below.