Update (2009-01-05): a more polished version of this article is published in my new book, Bridging the Communication Gap, as part of the “Implementing Agile Acceptance Testing” chapter!

The idea of the example-writing workshop to support acceptance testing seems to cause a lot of confusion and misunderstanding, at least judging from my two most recent talks and the questions during the discussion at the second Alt.NET UK conference. A lot of people seem to contrast it with iterative development and mistake the workshop for big design up-front, expecting that it will lengthen the feedback loop. To resolve the misunderstanding, here is an example of how the workshop (and acceptance testing) fits into an agile process to shorten the feedback loop and improve iterative development.

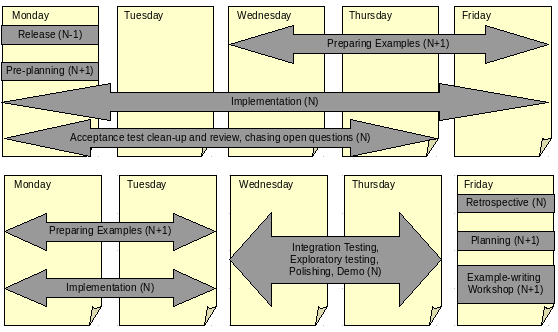

Agile acceptance testing, at least in the form in which I use it, does not say anything about how to develop software or organise iterations. There is nothing stopping us from fitting it into a two-week iteration. In fact, it works best with short iterations. Short iterations force us to keep the stories discussed in the workshop small, which in turn keeps the workshop short and focused. This is a simplified version of the iteration flow that Andy Hatoum and I came up with recently. Take it as a guideline, not as something that has to be implemented exactly this way. Hopefully it will make it easier to understand how to apply these ideas in different environments.

The brackets indicate which iteration a step relates to: N is the current iteration, N-1 is the previous one and N+1 is the next one.

- Potential Release (N-1): We can release the result of the previous iteration (marked N-1) on Monday. Not every iteration has to be shipped to customers, but we like to keep the code in a potentially shippable state at any point. We don't release on Fridays, to reduce the risk of causing production problems over the weekend, when people are not readily available to fix issues.

- Implementation (N): On Monday, the implementation of the current iteration (N) starts. This includes all normal agile development practices such as unit testing and continuous integration. An important change for teams already practising agile development is that the development is focused on implementing the acceptance tests specified for the iteration. Implementation includes running and cleaning up acceptance tests, identifying and discussing missing cases, and writing more tests for those cases or modifying existing tests. It also includes releasing to the QA environment often, so that the on-site customer and testers can play with the software and provide quick feedback. Implementation continues until Wednesday of the second week.

- Pre-planning (N+1): The clients, the business analyst, the project manager and the development team leaders work out roughly what will go into the next iteration (not the current one, but the one after). This is intentionally a rough plan, not carved in stone, but good enough to give the business analyst and the customers a starting point for discussion and allow them to stay one step ahead. This happens on Monday as well.

- Acceptance test clean-up and review (N): Not all acceptance tests will be perfect straight away, so a business analyst chases open questions and gets remote domain specialists to review the tests, while the PM can get any sign-off required for the tests. Acceptance tests can be simplified, cleaned up and organised better by testers, developers or business analysts. In practice, we expect this to span the first few days of an iteration. Changes might be introduced into the acceptance tests later, but we expect the bulk of the work to be done in the first few days and the tests to stabilise after that. The diagram shows that this should end by Thursday, but that is an estimate rather than a firm deadline. Clean-up ends when it ends: sometimes it may not be needed at all, sometimes it will end sooner, and sometimes it will spill over into the next week.

- Preparing examples (N+1): Once the bulk of the acceptance test clean-up is done, the business analyst can start working with the clients and QA on the examples for the next iteration, preparing a good starting point for the workshop. The timelines for this are again not enforced, so the diagram is a rough estimate. In general, after the work on the current iteration is done, the business analyst should focus on the next one and keep doing that until the polishing starts. The important thing is that these examples don't need to be absolutely complete at this point. The goal is to get started and prepare for the workshop, not to iron out all the acceptance tests.

- Integration testing, Exploratory testing, Polishing and Demo (N): The development should close by Tuesday in the second week of the iteration. At that point, we start polishing the result. During development, we expect developers to run packaging and release to the internal QA environment as soon as there is something for others to see. That allows the on-site client and QA to check what developers are doing and provide feedback, but those intermediate releases do not necessarily need to be production quality. Once development closes, we start really focusing on producing a clean, polished package. This involves cleaning up database scripts, packages and configuration, and testing the installation and upgrades from the previous version. Everyone switches from adding new features to exploratory testing and cleaning up the results. Issues, UI bugs and ideas for small improvements get resolved during Wednesday and Thursday. By Thursday afternoon, we should have a polished version that we can demonstrate to off-site customers remotely. Polishing may spill over into Friday, but that should be an exception rather than a rule.

- Retrospective (N): A normal retrospective, looking at the last two weeks of development and possibly a wider scope. Everyone is involved.

- Planning (N+1): Planning poker, estimating stories, selecting the scope for the next iteration. This becomes the official plan, and the results from the pre-planning session are used to start off the discussion. Everyone is involved.

- Example-writing workshop (N+1): After the scope has been selected, the workshop is organised to nail down the specifications and facilitate the transfer of knowledge. The aim of the workshop is to build a shared understanding among developers, business people and testers about the aims for the next two weeks of work. A more tangible result of the workshop is a set of realistic examples which can be converted to acceptance tests. Selected stories are introduced by the business analyst, then we all go through the examples that the business analyst has prepared with the customers. The other workshop participants then ask questions and suggest edge cases for discussion. Developers need to think about how they will implement the stories and identify functional gaps and inconsistencies. Testers need to think about breaking the system and suggest important test cases for discussion. We can organise feedback exercises during the workshop to check whether we all understand the same thing. Because realistic examples are discussed and written down, inconsistencies and gaps should be easy to identify at that stage and we will get a solid foundation for the development. The workshop ends when everyone involved agrees that there are enough examples and that everything is clear enough to start the work. Some questions may remain open, because they require further approval from a more senior stakeholder or the opinion of a particular domain expert, but in general the workshop should define the precise scope and ensure that we all agree on the same things. At the end of the workshop, a representative set of examples is chosen as the acceptance criteria and formalised into acceptance tests.
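To make the last step of the workshop more concrete, here is a minimal sketch of how a handful of agreed examples might be formalised into an executable acceptance test. Everything in it is an assumption made for illustration: the free-delivery rule, the threshold, and the names `qualifies_for_free_delivery`, `EXAMPLES` and `run_acceptance_examples` are hypothetical and do not come from any real project or tool. In practice the examples would more likely live in whatever acceptance testing tool the team uses, rather than in plain Python.

```python
from decimal import Decimal

# Hypothetical business rule, used only for illustration:
# orders of 50.00 or more qualify for free delivery.
FREE_DELIVERY_THRESHOLD = Decimal("50.00")


def qualifies_for_free_delivery(order_total: Decimal) -> bool:
    """Stand-in for the production code that the examples exercise."""
    return order_total >= FREE_DELIVERY_THRESHOLD


# Examples as they might be written down in the workshop:
# (order total, is free delivery expected?)
EXAMPLES = [
    ("49.99", False),   # just below the threshold (edge case from a tester)
    ("50.00", True),    # exactly on the threshold
    ("120.00", True),   # typical large order
    ("0.00", False),    # empty order (edge case from a developer)
]


def run_acceptance_examples(examples):
    """Check every example and return the failing ones as
    (order total, expected, actual) tuples."""
    failures = []
    for total, expected in examples:
        actual = qualifies_for_free_delivery(Decimal(total))
        if actual != expected:
            failures.append((total, expected, actual))
    return failures


if __name__ == "__main__":
    failures = run_acceptance_examples(EXAMPLES)
    print("all examples pass" if not failures else failures)
```

The point of keeping the examples as plain data is that business people can read and review the table without reading the code that runs it, which is what the clean-up and review step of the following iteration relies on.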

After the workshop, we start all over again with the next iteration.
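One way to see how the steps overlap is to group them by the iteration they relate to. The sketch below encodes only the bracket labels from the list above (N-1, N, N+1) as offsets; the names `STEPS` and `steps_by_iteration` are made up for this illustration, and the days of the week are deliberately left out because most of them are estimates.

```python
from collections import defaultdict

# Each step with the offset of the iteration it relates to,
# relative to the current iteration N (-1 = N-1, 0 = N, +1 = N+1).
STEPS = [
    ("Potential release", -1),
    ("Implementation", 0),
    ("Pre-planning", +1),
    ("Acceptance test clean-up and review", 0),
    ("Preparing examples", +1),
    ("Integration testing, exploratory testing, polishing and demo", 0),
    ("Retrospective", 0),
    ("Planning", +1),
    ("Example-writing workshop", +1),
]


def steps_by_iteration(current):
    """Group step names by the absolute iteration number they belong to."""
    grouped = defaultdict(list)
    for name, offset in STEPS:
        grouped[current + offset].append(name)
    return dict(grouped)


if __name__ == "__main__":
    for iteration, names in sorted(steps_by_iteration(5).items()):
        print(iteration, names)
```

Run for any current iteration, this shows that a single two-week period touches three iterations: releasing the previous one, building the current one and preparing the next one.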

For an alternative view, see Lisa Crispin’s process description.