It’s now been ten years since I submitted the final manuscript of Specification by Example to the publisher. In the book, I documented how teams back then used examples to guide analysis, development and testing. During the last two months, I’ve been conducting a survey to discover what’s changed since the book came out. Some findings were encouraging, confirming that the most important problems from ten years ago have been solved. Some findings were quite surprising, pointing at trends that prevent many teams from getting the most out of the process. Here are the results.

About the survey

I surveyed people who follow me on Twitter, LinkedIn and through various mailing lists and forums dedicated to Specification by Example. This means that the survey sample is biased towards teams that actually use this technique, rather than representing all software development. I collected a total of 514 responses, 339 of which used examples as a way of capturing acceptance criteria for development.

While reading the results, keep in mind that the sample is not representative of the whole industry. None of the numbers below indicate what percentage of overall software development includes Specification by Example. However, the results should be accurate to within 4-5 percentage points for teams that actually use examples to guide development.
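The 4-5% figure is consistent with the usual worst-case margin of error for a survey proportion at 95% confidence. The sketch below is only a sanity check under assumptions that are not stated in the survey itself: simple random sampling within the target group, a normal approximation, and the worst-case proportion of 50%. The function name `margin_of_error` is made up for this illustration.

```python
import math

def margin_of_error(sample_size, proportion=0.5, z=1.96):
    """Half-width of the normal-approximation confidence interval for a proportion.

    Assumes simple random sampling; z=1.96 corresponds to 95% confidence,
    and proportion=0.5 is the worst case (widest interval).
    """
    return z * math.sqrt(proportion * (1 - proportion) / sample_size)

# Sample sizes taken from the survey description: 514 responses in total,
# 339 of which use examples as acceptance criteria.
print(f"{margin_of_error(514):.1%}")  # 4.3%
print(f"{margin_of_error(339):.1%}")  # 5.3%
```

Percentages reported for smaller sub-groups (for example, only the people who automate their examples) would have a wider margin under the same assumptions.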

Arnaud Bailly prompted me to run this research with interesting questions which I did not know how to answer. Christian Hassa, David Evans, Gáspár Nagy, Janet Gregory, Lisa Crispin, Matthias Rapp, Matt Wynne, Seb Rose and Tom Roden helped me make the conclusions much better and clearer by providing invaluable feedback. Thanks a million to all of you!

Relationship between product quality and using examples

In the survey, I asked people to roughly estimate the quality of their products by choosing one of the following categories:

- Users almost never experience problems in production (Great)

- Problems in production happen but they are not serious (Good)

- Users experience serious problems frequently (Poor)

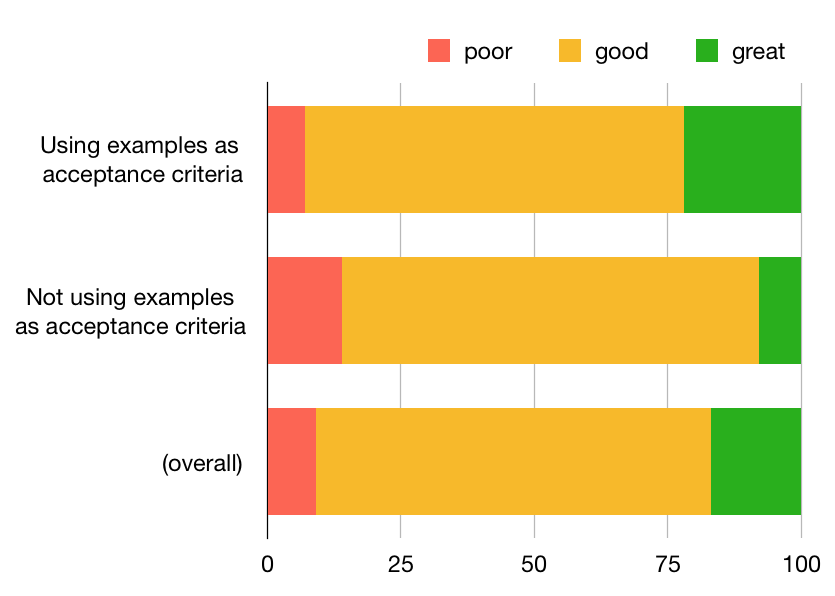

Combining that with the answers to the question “Do you use examples as acceptance criteria for your specifications and/or tests?”, we can get a rough estimate of the correlation of this technique with product quality.

| Rough estimate of product quality | Great | Good | Poor |
|---|---|---|---|
| Using examples for acceptance criteria | 22% | 71% | 7% |
| Not using examples for acceptance criteria | 8% | 78% | 14% |
| (overall) | 17% | 74% | 9% |

The results show that using examples for acceptance criteria is strongly correlated with higher product quality. Note that this is correlation, not necessarily causation. People who use examples for driving specifications and tests might take product quality more seriously and apply other techniques as well. To test this, I asked people whether they were using exploratory testing (a good general indicator of a culture of serious testing). Overall, 73% responded positively. Limiting to just people who use examples as acceptance criteria, this number increased to 81%. This suggests a strong correlation between examples as a way to drive development and taking product quality seriously.

Given-When-Then won, by a huge margin

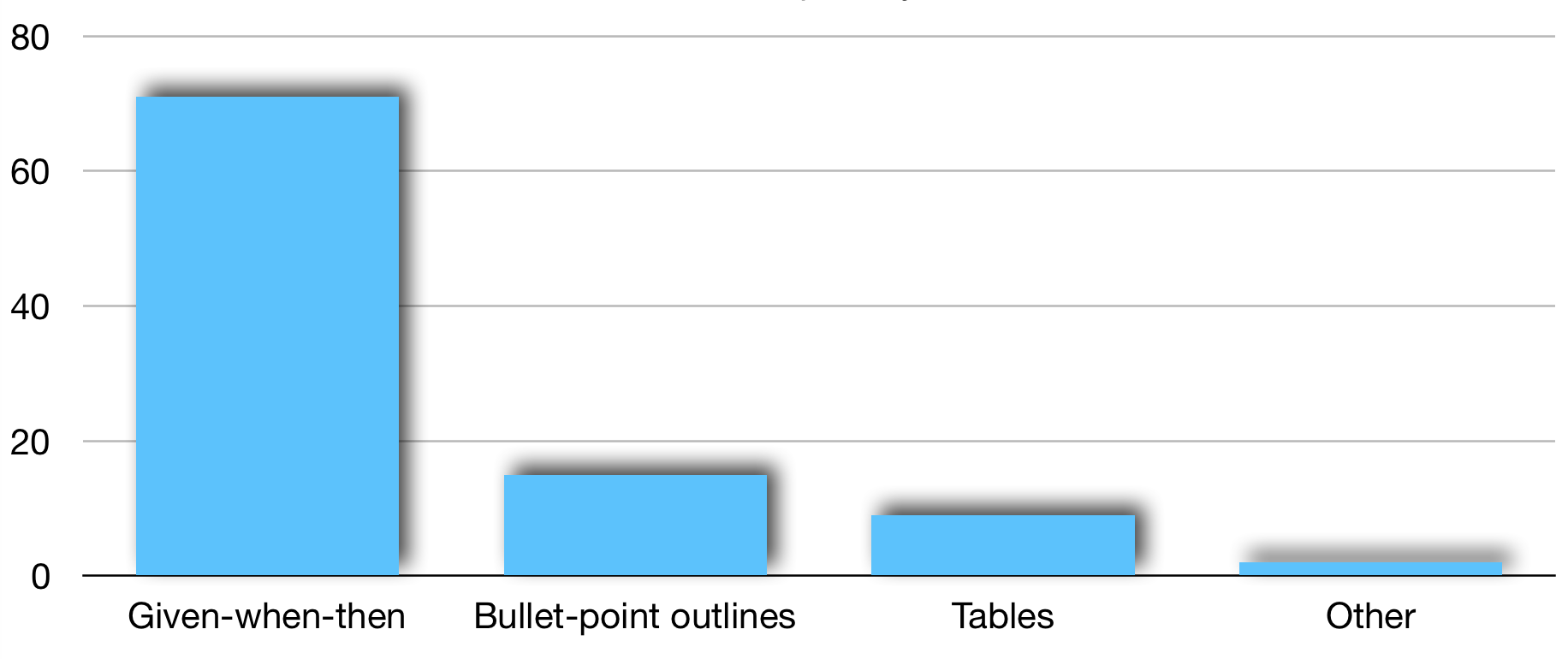

Ten years ago, there was no standard format for how examples should look. Today, the default option (used by 71% of respondents) seems to be Given-When-Then.

| What format do you primarily use to capture the examples in your specifications/tests? | Share of respondents |
|---|---|
| Given-when-then | 71% |
| Bullet-point outlines | 15% |
| Tables | 9% |
| Other | 2% |

If you used examples to drive development way back in the eighties, your name was probably Ward Cunningham. Ward, of course, figured it out first, as he did with many other things that we now take for granted. Ward’s work on the WyCash+ project inspired many XP practices, including what became known as ‘Customer Tests’ in the late nineties. There was no specific prescribed format for customer tests in XP Explained, the book that introduced that term to a wider audience. In the early days of agile development, the big challenge for adopting Customer Tests was to find some way of capturing information that could be used by all the various roles involved in delivering software. Since Ward’s original work was in financial planning, he used spreadsheets, which influenced the first tool for automating tests based on examples (FIT). FIT led to the first book dedicated to the topic, Fit for Developing Software, which came out in 2005 and further promoted tables as a way to capture examples. In the mid-2000s, Spec by Example was still in the innovators segment of Geoffrey Moore’s technology adoption cycle, and there was a great deal of innovation. Rick Mugridge, Jim Shore and Mike Stockdale extended FIT in various ways to better suit their clients’ needs. Micah Martin and Robert C. Martin significantly improved the usability of FIT and the various extensions for analysts and developers. They put a convenient wiki-based editor and web front on top of those tools, calling it FitNesse.

Tables were great for capturing examples in financial institutions, but did not work that well for workflows and less structured information. David Peterson experimented with free-form text for examples at Sky in the UK, building Concordion. At Nokia Siemens Networks in Finland, Pekka Klärck experimented with introducing hierarchical keywords, which led to the Robot Framework. Dan North, Liz Keogh and Chris Matts came up with ‘Given-When-Then’ while working at ThoughtWorks in London, which led to JBehave, and later to a whole host of tools with the same syntax, which became known as Gherkin. This includes the most popular tools today, such as Cucumber and SpecFlow.

Most teams participating in the research that led to the Specification by Example book used either FIT or FitNesse, and captured their examples with tables. Today tables account for less than 10% of the usage.

In this survey, I didn’t ask enough questions to conclude why the preferences changed so much, so it’s difficult to provide a definite explanation. My best guess is that Given-When-Then won due to a good balance between expressiveness and developer productivity. Tables tend to be great for groups of examples with repetitive structure, but they aren’t good for capturing flows or groups of examples with varying structure. Likewise, tables might be difficult to enter in tools that do not support fixed spacing (such as text fields in a task tracking system), but text in the Given-When-Then format can be easily typed up anywhere. This might not seem as important, but I think it’s actually key to the popularity of this way of capturing examples. More on this later, though.

Tools for automating examples from tables, such as FIT, tend to be simple. Tools that can work with more free-form descriptions, such as Concordion and Robot Framework, tend to require a lot more work. Given-When-Then is somewhere in the middle between the two. It provides enough structure to express different types of ideas, but it also makes it easy to automate tests. Starting from Cucumber onwards, tools that work with Given-When-Then could also incorporate tabular information, which is perhaps why the table-only tools became less popular.
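To illustrate why the Given-When-Then structure is relatively easy to automate, here is a minimal sketch of the general idea: each line of text is matched against a registered pattern, and the captured values are passed to a small automation function. This is not how Cucumber, SpecFlow or JBehave are implemented; the `step` decorator, the `run_scenario` function and the bank-account scenario are all hypothetical and exist only for this illustration.

```python
import re

# Hypothetical registry: pairs of (compiled pattern, automation function).
STEPS = []

def step(pattern):
    """Register a function as the automation for steps matching the pattern."""
    def register(func):
        STEPS.append((re.compile(pattern), func))
        return func
    return register

@step(r"an account with a balance of (\d+)")
def set_balance(context, amount):
    context["balance"] = int(amount)

@step(r"the customer withdraws (\d+)")
def withdraw(context, amount):
    context["balance"] -= int(amount)

@step(r"the remaining balance should be (\d+)")
def check_balance(context, expected):
    assert context["balance"] == int(expected)

# An invented example in the Given-When-Then format.
SCENARIO = """
Given an account with a balance of 100
When the customer withdraws 30
Then the remaining balance should be 70
"""

def run_scenario(text):
    """Execute each step in order, sharing state through a context dict."""
    context = {}
    for line in text.strip().splitlines():
        keyword, _, body = line.strip().partition(" ")
        if keyword not in ("Given", "When", "Then", "And", "But"):
            raise ValueError(f"Unexpected keyword: {keyword}")
        for pattern, func in STEPS:
            match = pattern.fullmatch(body)
            if match:
                func(context, *match.groups())
                break
        else:
            # No registered pattern matched this step text.
            raise LookupError(f"No automation for step: {body}")
    return context

print(run_scenario(SCENARIO))  # {'balance': 70}
```

The scenario text stays readable without any knowledge of the functions behind it, while the functions stay small and reusable across scenarios.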

Given-When-Then also provided just enough structure so that the specification of examples can live on its own, without being too tightly integrated with a single automation tool. From the early days of FIT until Cucumber, the format of examples and the corresponding automation tool were tightly coupled. Given-When-Then structure allowed third-party vendors to support this format easily without committing too much to a specific automation solution. An ecosystem of tools evolved around that format, including support for syntax highlighting and refactoring in popular IDEs, and lots of small utilities for populating and publishing examples. This helped significantly with developer productivity compared to other tools, which tended to be more tightly integrated around a single product.

Another reason for the popularity of Given-When-Then might be that it allowed testers and developers to share the burden of test automation more than with traditional test tools. Developers could provide basic automation components, and testers could reuse and combine them by editing text files. Although this approach is arguably better than isolating the work of testers and developers, it doesn’t lead anywhere close to the results of driving development with examples, and it is a common source of confusion. Many teams that never experienced good collaborative analysis based on examples confuse Behaviour Driven Development with test automation based on Given-When-Then. Seb Rose, author of the BDD Books series, says: “I see far more people using G/W/T for test automation than to support BDD/SbE. And the BDD TLA [three letter acronym] has become synonymous in the industry with G/W/T powered tests - our BDDs are broken”.

The formulaic aspect of Given-When-Then made it easy to develop tooling support, but it also made the format prone to abuse. Tom Roden, partner at Neuri Consulting, says: “I think people latch onto G/W/T because it gives comfort in a formula, relying on or believing that the formula will automatically yield a good result, like the Connextra user story template. We look for something easy to save time, especially with many other things to worry about. This breeds hope in magic formulas, whereas in reality they are just a lightweight structure to stimulate good thinking, not an end in themselves.”

Tooling has stabilised

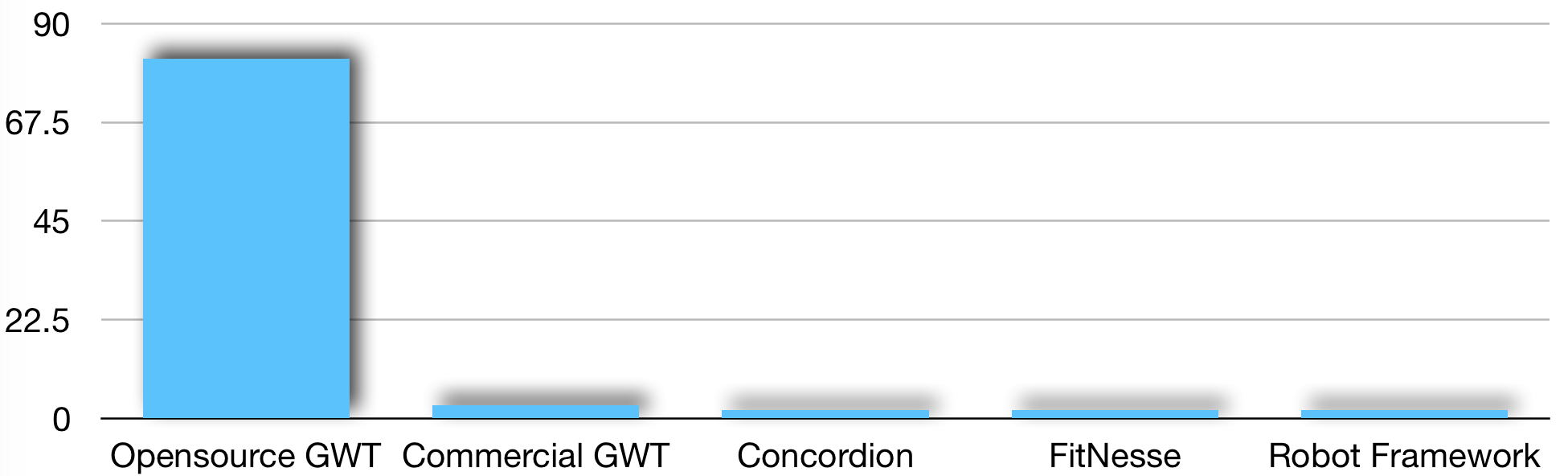

By the time I started working on the Specification by Example book, the technique had moved from innovators to early adopters on Geoffrey Moore’s scale. Tools such as Cucumber and SpecFlow emerged with better IDE integration, focusing more on developer productivity than on figuring out the basics of the process. Because Given-When-Then became so ubiquitous, it’s no surprise that the tools around that format are the most popular.

| What kind of tool are you using for automating testing based on examples? | Share of respondents |
|---|---|
| Open source based on given-when-then (Cucumber, SpecFlow,…) | 82% |
| Commercial tooling based on given-when-then | 3% |
| Concordion | 2% |
| FitNesse | 2% |
| Robot Framework | 2% |

The lack of tool diversity might be surprising at first. I think that more tools aren’t available because Specification by Example fundamentally isn’t a tooling problem. Once you have the right examples any tool works, and if you don’t have the right examples then no tool will save you. The currently popular tools seem to do the trick for the most common use cases, and teams tend to develop custom utilities for more specific domains. (For example, I built Appraise to do visual Specification by Example for layouts and look & feel on MindMup).

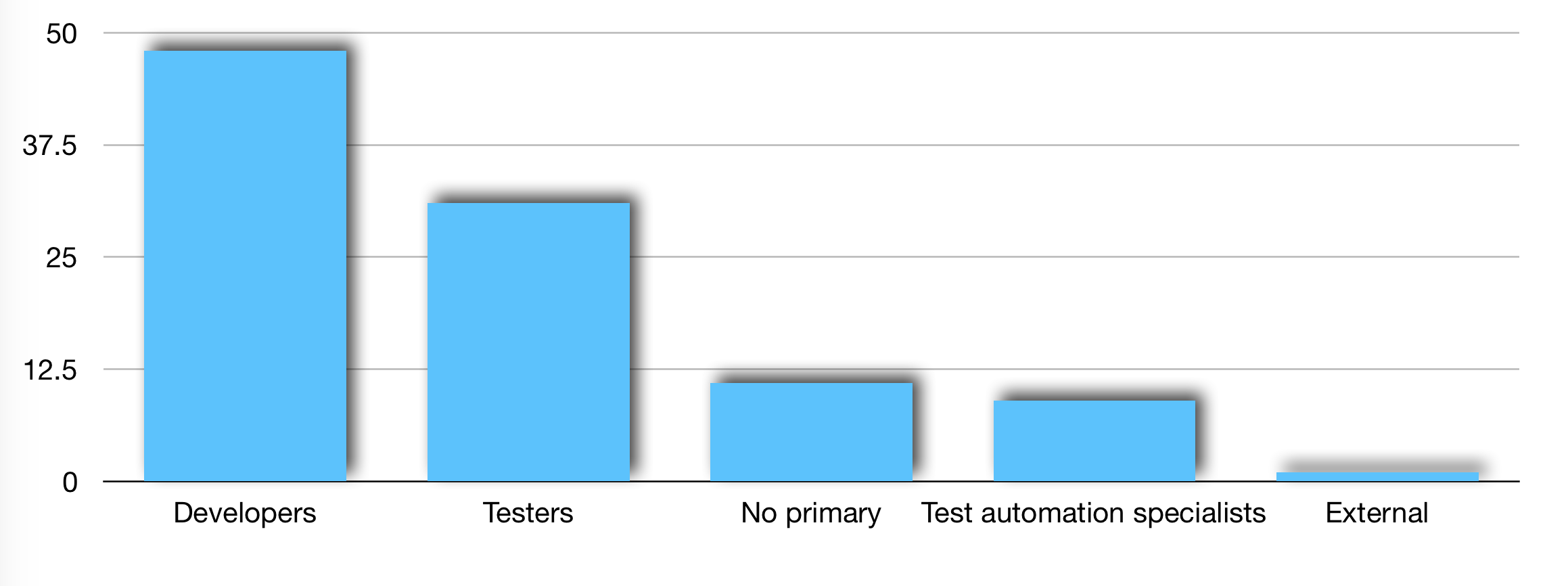

All the early tools for automating testing based on examples were open-source. With the rising popularity of the process, commercial tool vendors got into the game. Still, the share for commercial tools in this space is minuscule. One reason for that might be that commercial testing tool vendors used to sell to test managers and test specialists, and mostly focused on testing as an isolated activity. Testers are not the primary users of automation tools in cross-functional teams, as the next group of answers clearly shows.

| Who is primarily responsible for automating testing based on the examples? | Share of respondents |
|---|---|
| Developers who implement the related feature | 48% |
| Testers inside the team | 31% |
| Any delivery team member - nobody is primarily responsible | 11% |
| Test automation specialists outside the delivery team but in the same organisation | 9% |
| Someone external/outsourced/specialist testing organisation | 1% |

With Specification by Example, cross-role work becomes a lot more important than siloed testing. Business people, developers and analysts shy away from using tester-oriented tools. For analysts and business users such tools tend to be too complicated and technical. For developers, they tend to be too limiting and not integrated well into other developer tools. As a result, people outside the testing profession do not tend to have brand awareness or product knowledge about commercial testing tools, and product managers for those tools do not tend to understand agile development. For a few years, traditional test tool vendors tried unsuccessfully to get into the agile game by just slapping buzzwords or ‘agile templates’ onto their products, without addressing the real issue of cross-role collaboration.

Interestingly, things seem to be moving in a different direction now. The most popular Given-When-Then tools for Java and .Net were bought by large companies last year. SmartBear acquired Cucumber and Tricentis bought SpecFlow. It will be very interesting to see what comes out of those acquisitions over the next few years, and whether currently popular solutions will just be swallowed by traditional test tools, or whether they will help the vendors reinvent themselves for a new market. Matthias Rapp, General Manager of SpecFlow, said about this: “Tricentis has historically been associated with the QA/testing professional. With SpecFlow we want to set out for new endeavours by promoting the beauty of BDD and examples while providing tooling early on to the entire agile team. Since SpecFlow is independently run, we will keep our strong focus on open source and the BDD community. With the help of Tricentis we are now able to drive our vision forward faster. Which is why we were able to convert for example SpecFlow+ LivingDoc into a free offering.”

Moore’s famous Chasm between early adopters and early majority requires appealing to people who want convenience and full solutions. Although IDE support for editing and managing Given-When-Then files is reasonably good now, I think tool vendors still have a long way to go to provide seamless integration with the rest of the ecosystem. I will come back to this later.

Note that the percentages in the tables in this section only relate to people that actually automate testing based on examples. Because of that, the share for tools may seem higher than the share for the corresponding format of examples from the previous section. Which brings me to the first big surprising conclusion.

Collaboration won over testing

Around 2007 and 2008, Scrum had not yet won the marketing game, but it was pretty close. Although XP started disappearing from sight, many XP ideas had actually crossed into the mainstream by then. Automated unit testing, continuous integration and short iterative cycles became widely accepted. Most of the iterative work was, however, limited to developer activities. Ideas from XP Explained that involved other roles were a lot more difficult for the industry to swallow. Business analysis and testing were often outside the delivery team. Dan North and Martin Fowler talked about the Yawning Crevasse of Doom at QCon London 2007, suggesting that analysts should not be translating between business and development, but facilitating better direct communication. They used a powerful analogy suggesting that modern software development required bridge builders and not ferrymen. Heavily influenced by that, and ongoing client work where testers and analysts started collaborating on examples as a way to break down barriers, I wrote Bridging the Communication Gap. Around the same time, Lisa Crispin and Janet Gregory wrote the book on Agile Testing, promoting collaboration across roles. Still, ten years ago, the idea of delegating specialist analysis or testing work across the whole team was dismissed by most people as something impossible, impractical or even heretical.

Specification by Example, the book, came out as a result of my frustration with conference presenters who claimed that collaborative analysis and testing could not possibly work “in the real world” (for another result of that same frustration that involved a bit more alcohol, see also the story of the Agile Testing Days unicorns). Early adopters were blurring role boundaries with great results, and I wanted to document how that process works in the real world, so I collected case studies. Although most of the stories from the book ended up involving heavy test automation, a recurring message from many interviews was that the conversations leading to examples were often more valuable than the resulting tests. In the book, I documented a flow of seven common steps from a business goal to an automated test that can act as self-checking documentation. I also proposed that the early part of that flow (called ‘specifying collaboratively’ in the book) might be extremely valuable even for teams that do not proceed to the later parts.

Cross-role information sharing, discovery and collaborative modelling were nice side-effects of Customer Tests in XP, but there was always a strong focus on the tests. As the community evolved cross-role techniques to identify, capture and discuss examples in order to better define the test cases, it turned out that those facilitation and collaboration methods had a lot of value on their own. Dan North, of course, spotted the changing dynamic years before everyone else, and started rephrasing the process in customer conversations to move the focus away from testing, coining the name Behaviour Driven Development. (Fun fact: the original name for my book was Software by Example. As we discussed the initial research results, the book publisher convinced me to rename the book, since collaborative specifications might be the most valuable part).

Since the book was published, I have promoted the message that ‘specifying collaboratively’ is the key to the process through conference talks and client engagements. Many other people had similar realisations, and tried hard to push the community away from the focus on automated tests towards having more effective conversations. Liz Keogh nicely explained it as “having conversations is more important than capturing conversations is more important than automating conversations.” Craig Larman and Bas Vodde wrote about how ‘the focus of customer-facing tests changes from traditional testing to collaboratively clarifying the requirements’ in Practices for Scaling Lean and Agile. I’m very glad to say that in 2020, the message seems to have been received.

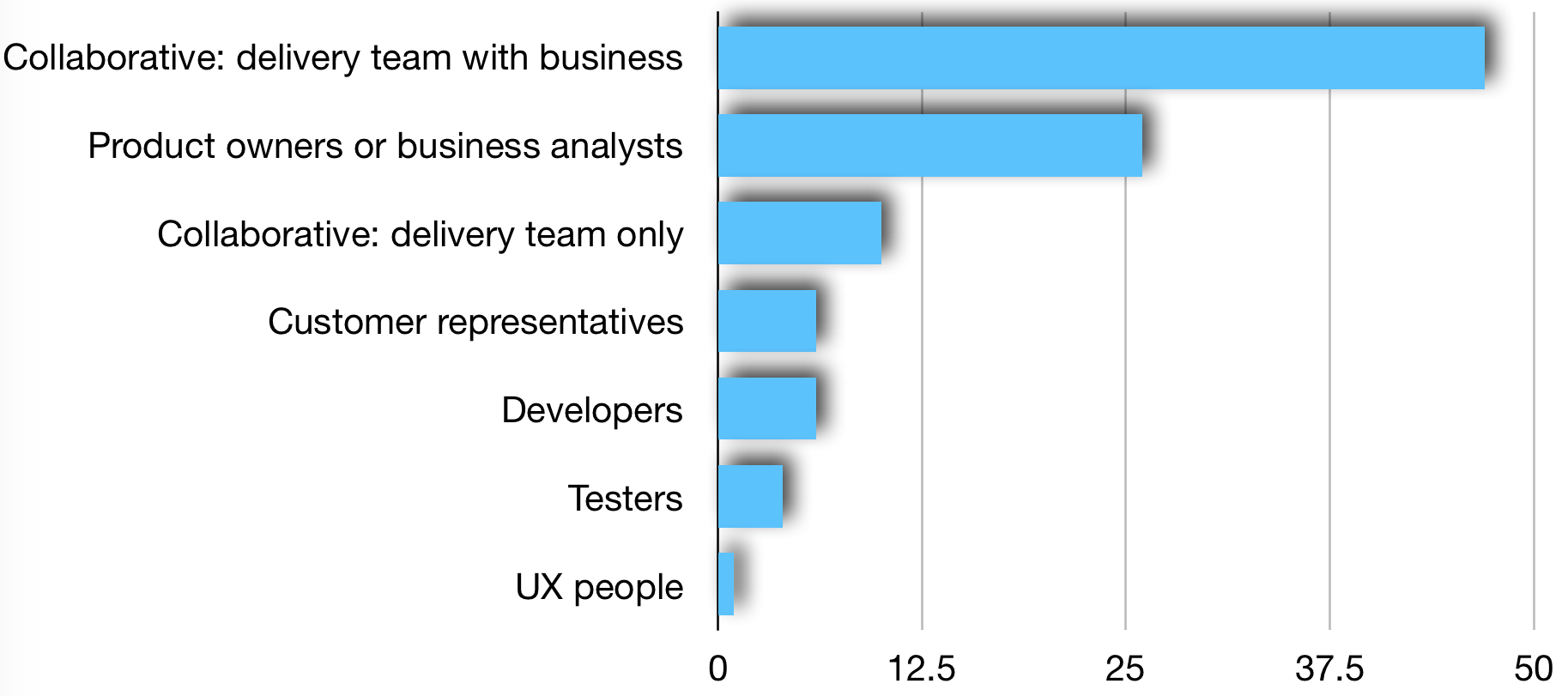

| Who is primarily responsible for defining the acceptance criteria? | Share of respondents |
|---|---|
| Collaborative: Delivery team together with business representatives | 47% |
| Product owners or business analysts (from the delivery team) | 26% |
| Collaborative: Whole delivery team (without business representatives) | 10% |
| Customer/Business representatives/sponsors (outside delivery team) | 6% |
| Developers | 6% |
| Testers | 4% |
| UX people | 1% |

The majority of the respondents (57%) define acceptance criteria for their work collaboratively. About half of all respondents (47%) engage business representatives directly, while around 10% organise the collaboration without business representatives, then engage them later. This engagement can take several forms:

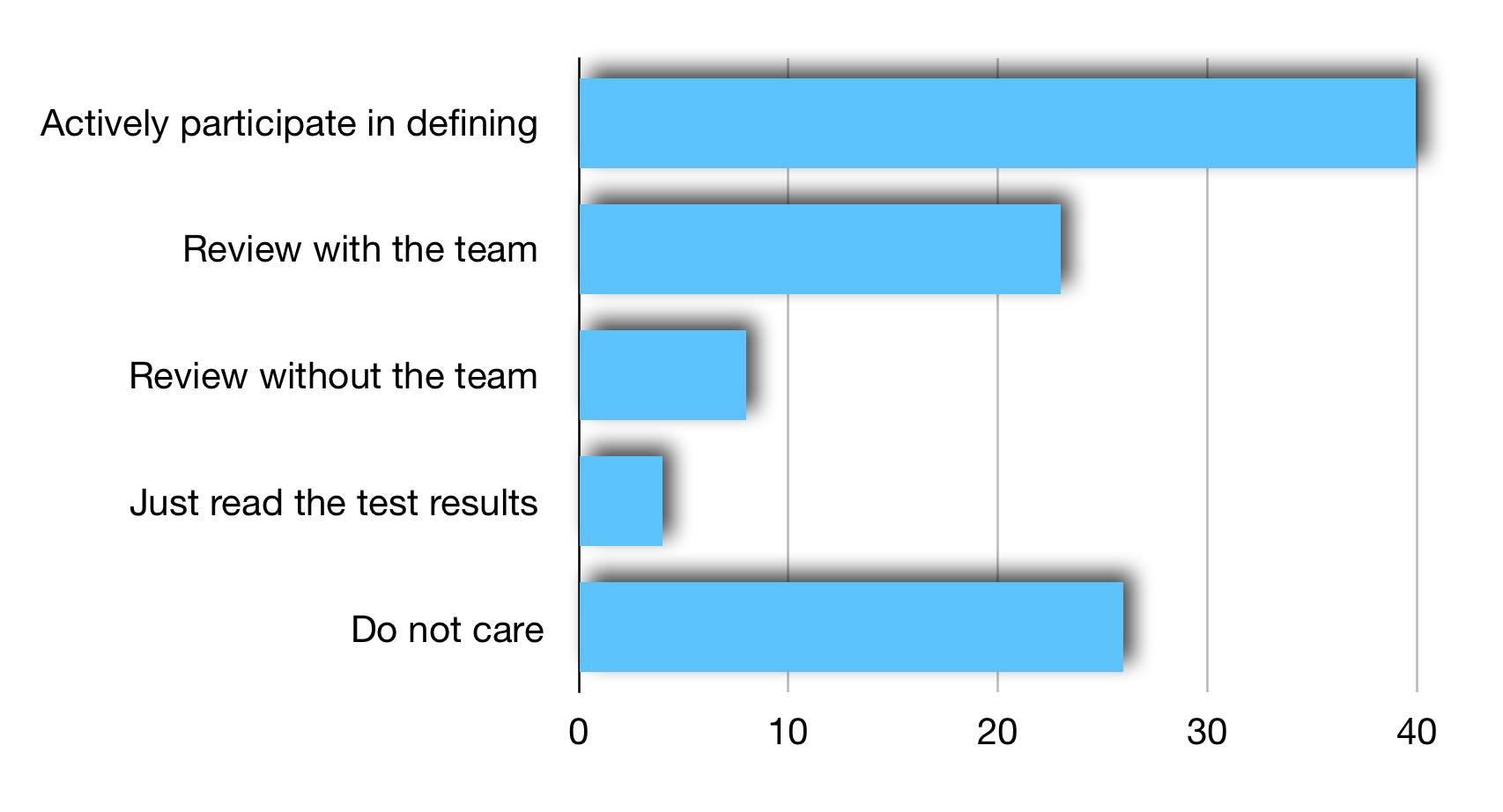

| How do business representatives engage with examples in specifications or tests? | Share of respondents |
|---|---|
| Actively participate in defining | 40% |
| They mostly do not care about the examples | 26% |
| Do not participate in defining, but review with the team | 23% |
| Do not participate in defining, but review without the team | 8% |
| Just read the test results | 4% |

For about one quarter of the respondents, business representatives do not care about examples in specifications and tests at all, which seems like a missed opportunity. On the other hand, business representatives engage in some form with examples for 71% of the surveyed people, which is quite encouraging. Hopefully, this trend will continue in the future.

FitNesse and Concordion tried to make documentation based on examples more appealing to business representatives, by providing collaborative editing, supporting images, rich text formatting and cross linking. The popular tools today do not really try to do that. Relish was an attempt in that direction, but it was abandoned in favour of Cucumber Pro, which never took off. It’s now being merged with another tool, which is trying to address a similar problem. With the growing popularity of the Given-When-Then syntax and the tools that supported it, the community gained a lot in terms of developer productivity, but I feel we lost something in being able to create good documentation.

The simplicity of the Given-When-Then format, which allowed it to win over other forms of examples and gain tooling support, might have also been the limiting factor for collaboration. Gáspár Nagy, BDD trainer at Spec Solutions, suggests that “The plain-text file format of feature files is not strong enough to store all the information that is required for collaboration (e.g. where would you store structured discussion on scenario steps). So a collaboration tool would need to store extra information somewhere but still maintain the connection to the feature files. For making such a tool, complex problems have to be solved, like error and conflict handling, branching/merging of parallel edits and security concerns (how does the tool save anything into your source control). For the tool vendors, these problems make it costly to implement anything conveniently usable. As the user base and the need grows, the income might compensate this and more tools will be available… I think the Cucumber/SpecFlow acquisitions are steps towards this. Cucumber Studio (the merger of Cucumber Pro and Hiptest) is something that looks promising.”

It will be interesting to see if the tooling vendors start supporting a wider audience through better document formatting and easier linking, especially because there seems to be a big gap in what the tools offer, and what a huge number of respondents need, which brings me to the second big surprise.

About a third of people who use examples do not automate them

For roughly one third of the people who use examples as acceptance criteria, automating testing based on examples wasn’t worth the trouble.

| Do you use a tool to automate testing based on the examples from specifications? | Share of respondents |
|---|---|
| Yes | 71% |
| No | 29% |

While this means that as a community we succeeded in driving home the message that conversations are more important than test automation, this also means that about one third of the teams miss out on the potential benefits of examples to create high quality, self-checking documentation. The final of the seven steps from the Specification by Example book was to use examples as a source of truth about the system, as documentation to support product evolution, for onboarding new team members and for evaluating proposed changes. When examples are automatically tested, specifications based on those examples can be easily kept up-to-date, so this kind of documentation evolves with the underlying software system easily. David Evans coined the term Living Documentation to describe this characteristic of automated examples just in time to include it in my book (I had a much worse, less catchy name), and Cyrille Martraire later evolved that concept beyond examples and wrote a great book about it. Living Documentation is incredibly powerful for long-term software evolution and maintenance. However, it relies on a quick and cheap way of tracking which examples are still valid, and which need to change. This assumes automation for anything but the simplest software products.

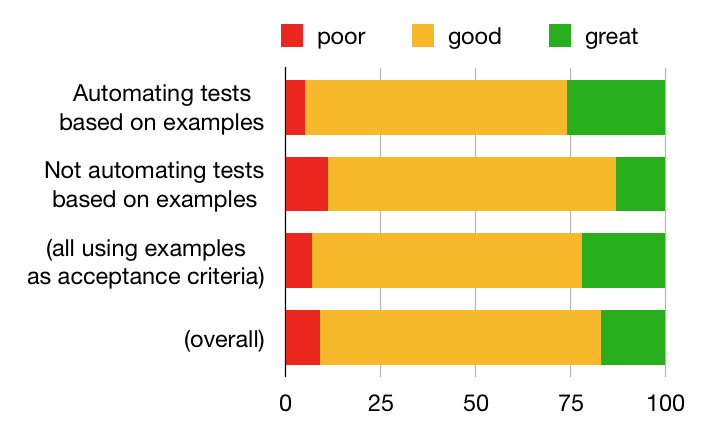

Automation in this case strongly correlates to improvements in the self-reported product quality estimate. Looking only at survey participants that use examples as acceptance criteria, and dividing them into two groups based on whether those examples led to automated tests or not, the percentage of the group with automation evaluating their software as ‘great’ was 26%, double the number for the same segment in the group of people who just use examples as acceptance criteria but do not automate them. The segment of people who rated their software as ‘poor’ was 5% in the group that automates examples, and more than double (11%) in the group that does not automate tests based on examples.

| Do you use a tool to automate testing based on the examples from specifications? | Great | Good | Poor |
|---|---|---|---|
| Yes | 26% | 69% | 5% |
| No | 13% | 76% | 11% |
| (those using examples as acceptance criteria) | 22% | 71% | 7% |
| (overall) | 17% | 74% | 9% |
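As a rough consistency check on the table above, the row for everyone using examples as acceptance criteria should be a weighted average of the ‘Yes’ and ‘No’ rows, weighted by the 71% / 29% split between people who automate and people who do not. The sketch below works only from the rounded percentages published in this article, so small rounding differences would be expected in general; the variable names are made up for the illustration.

```python
# Split of people who use examples, by whether they automate them (from the survey).
AUTOMATED_SHARE = 0.71
NOT_AUTOMATED_SHARE = 0.29

# Self-reported quality, in percent, for each group (rounded figures from the table).
quality_if_automated = {"Great": 26, "Good": 69, "Poor": 5}
quality_if_not_automated = {"Great": 13, "Good": 76, "Poor": 11}

def combined_quality(automated, not_automated):
    """Weighted average of the two groups, rounded to whole percent."""
    return {
        rating: round(
            AUTOMATED_SHARE * automated[rating]
            + NOT_AUTOMATED_SHARE * not_automated[rating]
        )
        for rating in automated
    }

print(combined_quality(quality_if_automated, quality_if_not_automated))
# {'Great': 22, 'Good': 71, 'Poor': 7}
```

The result matches the ‘those using examples as acceptance criteria’ row, which suggests the two sub-group rows and the 71% / 29% split are mutually consistent.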

Task tracking tools won at the expense of good documentation

The original promise of Living Documentation was creating a single source of truth. In the book, I documented many cases of teams using examples to build documents that can be used as specifications for upcoming work, as acceptance tests once that work is done, and as regression tests and system documentation in the future. When a single document represents both a specification and a test, it’s impossible to update one and forget the other. Used properly, this aspect of Specification by Example becomes incredibly powerful. Craig Larman and Bas Vodde documented how this approach simplified regulatory compliance in a nuclear-power plant simulation project, and I’ve seen the same thing with several consulting clients in the financial services industry. Dave Farley recently evolved this idea into Continuous Compliance. Many case studies in the Specification by Example book, even from teams that didn’t need such strict compliance, suggest how having a single source of truth can significantly speed up future work. Yet, most of the respondents from my recent research don’t come even close to these benefits.

Christian Hassa, Product Manager of SpecFlow, said: “We believe the concept of Living Documentation is a yet unrealized key benefit for large organizations. Making automated examples more accessible to business stakeholders and connecting with information in already established tools are key enablers for broader adoption.”

With a single source of truth, there’s always a problem of allowing different categories of users to access the information easily. Developers prefer command line tools and plain text. Other people on the team generally don’t like that way of working, and often do not have the adequate tools or skills to even try it. Most of the Specification by Example tools try to solve this problem in the same way: describe examples with some form of text file that can be stored in a version control system, and provide multiple views of it. Everyone can then in theory work from the same source, but not necessarily access the information in the same place. Accessing version control systems is a big barrier for business users and sometimes for less technical team members, so FitNesse tried solving it with a wiki-based web interface. Concordion tried solving it by working from HTML files that could be published as a web site. Some early commercial tools, such as Green Pepper by Pyxis Tech, tried going in the other direction - letting users store examples in a popular wiki system such as Confluence, and run them from there instead of from the version control system. Green Pepper was quite impressive when I wrote the book, but since then it seems to have been abandoned. There was an attempt by Novatec to restart the tool under the name LivingDoc but it did not gain wide adoption.

With the current breed of Given-When-Then tools, the most effective solution seems to be converting the specification files into something nicer and easier to read (for example replacing pipe characters with actual table formatting), and then publishing a read-only version somewhere outside the version control system. For example, SpecFlow+ has a plug-in to view specification files from TFS/Azure DevOps (VSTS). Relish and Pickles both tried to facilitate publishing Given-When-Then text files into nice web pages, but both projects were abandoned.
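The ‘replacing pipe characters with actual table formatting’ step can be sketched in a few lines. The function below is a hypothetical illustration, not code from SpecFlow+, Relish or Pickles: it takes the pipe-delimited rows of an example table, treats the first row as the header, and produces a plain HTML table. It deliberately ignores details a real publishing tool would need to handle, such as escaped pipe characters inside cells.

```python
from html import escape

def pipe_rows_to_html(lines):
    """Convert pipe-delimited table rows into a simple HTML table.

    The first table row is treated as the header. Lines that are not
    table rows (no leading and trailing pipe) are skipped.
    """
    rows = []
    for line in lines:
        stripped = line.strip()
        if not (stripped.startswith("|") and stripped.endswith("|")):
            continue
        # Drop the outer pipes, then split the remaining text into cells.
        rows.append([cell.strip() for cell in stripped[1:-1].split("|")])
    if not rows:
        return ""
    header, *body = rows
    html = ["<table>"]
    html.append("<tr>" + "".join(f"<th>{escape(c)}</th>" for c in header) + "</tr>")
    for row in body:
        html.append("<tr>" + "".join(f"<td>{escape(c)}</td>" for c in row) + "</tr>")
    html.append("</table>")
    return "\n".join(html)

# Invented example rows, as they might appear in a feature file.
example = [
    "| balance | withdrawal | remaining |",
    "| 100     | 30         | 70        |",
]
print(pipe_rows_to_html(example))
```

The output is read-only by nature: edits still have to go back through the text file in version control, which is part of why this kind of publishing does not help with collaboration on its own.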

One of the reasons why such attempts did not gain wide adoption might be the restrictions of the ubiquitous Given-When-Then format. Early tools such as FitNesse and Concordion allowed teams to move beyond plain text, but with the recent focus mostly on Given-When-Then, the trend reversed. If they want to support a wider use of examples as documentation, tool vendors may need to move beyond that format. Christian Hassa said “While abstract rules and model specifications can be already described in Gherkin feature files, few teams make use of this due to the limitations of plain text. This yields feature files reduced to automation scripts buried in source control where the higher level context is missing. SpecFlow+ publishes feature files on Azure DevOps with MarkDown to provide more context with images and formatting. The examples become meaningful specification for business which can be linked with other documentation.”

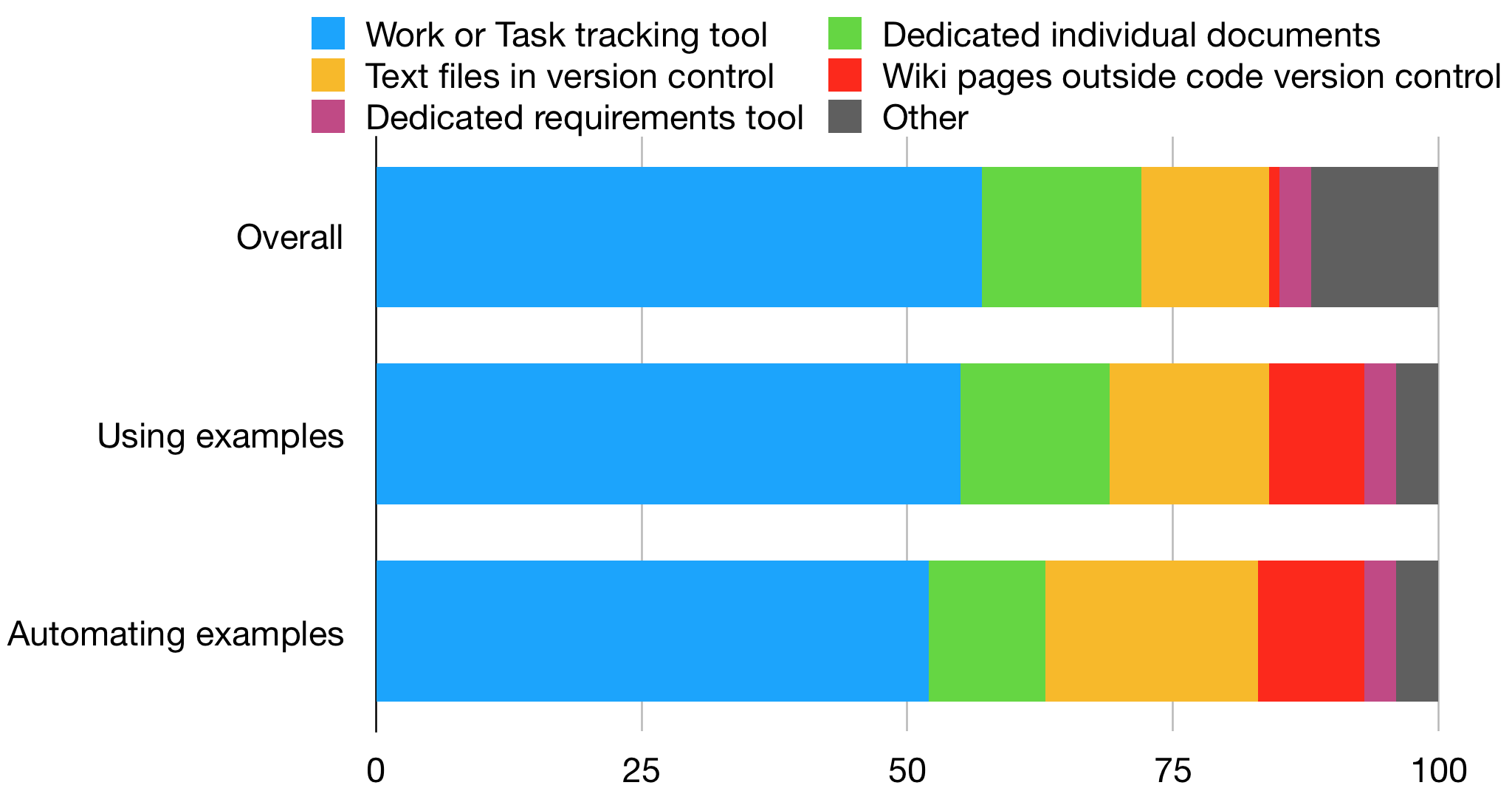

The fact that the rest of the tooling ecosystem matured so much while the publishing part did not follow has always puzzled me, but the responses from the research shed a completely different light on the situation. Asked what they consider the “primary source of truth for work requirements”, more than half of the respondents chose a task tracking tool. The ubiquitous Jira and its lesser relatives won far more mindshare than tools that tried to create good living documentation. I intentionally asked that question broadly, to check whether people actually consider their spec files to be true specifications.

| What is the primary source of truth for work requirements? | Overall | Using examples | Automating examples |
|---|---|---|---|
| Work or Task tracking tool (eg Jira) | 57% | 55% | 52% |
| Dedicated individual documents (eg Word files) | 15% | 14% | 11% |
| Text files in version control (eg feature files or FitNesse pages committed to git) | 12% | 15% | 20% |
| Wiki pages outside code version control systems (eg Confluence) | 1% | 9% | 10% |
| Dedicated requirements management tool (eg RISE) | 3% | 3% | 3% |

Overall, 57% of respondents chose a task tracking tool as the primary source of truth. The second most popular category, with 15% overall, was individual dedicated documents, usually preferred by traditional business analysts or third-party clients. Text files in version control, the holy grail of what was promised in the original Specification by Example book, ended up in third place, used by only 12% of the respondents. So it’s safe to say that the idea of specifications and tests in a single document didn’t really work out as expected over the last 10 years.

The situation changes slightly when we restrict the group to only those who actually use examples in acceptance criteria, in which case text files in version control become more popular than individual documents. The share of task tracking tools does not decrease a lot. Restricting this further to people who also automate tests based on examples, text files in version control systems increase to 20%, but again mostly at the expense of other types of documents. Task tracking tools are still on top, by a huge margin.

Even among teams that have the technical capability to create a single source of truth, most choose not to do it. This could mean that task tracking tools became the standard expected repository of requirements, and that there’s not much point trying to fight it. It could also mean that the convenience of using task tracking tools to store whatever teams perceive are their requirements outweighs the benefits of having a single source of truth. Matthias Rapp said about this: “Work item/task management tools (Jira, Azure DevOps, …) are the leading systems that drive any other downstream development task these days. With SpecFlow we want to bring BDD smoothly into these workflows.”

Gáspár Nagy suggested that “If you have your work items (stories, epics, features, bugs) all in Jira or Azure DevOps you will less likely to jump to an external system to check the detailed requirements. Cross-linking causes trouble too. I know about teams who copy-paste the scenarios to Jira tickets to have everything in the same place. For me, this does not necessarily mean that all collaboration problems have to be solved within the ALM tools, but integration with this tools is an important concern.”

Note that I’ve intentionally asked about the primary source of truth, since with the current tooling it is very difficult to create a reliable single source of truth with task tracking tools. Structuring documents around work items creates a huge problem for maintaining good documentation in the long term. Work items tend to explain the sequence of work, not the current state of the system. Janet Gregory, co-author of the Agile Testing book series, says “I spend a lot of time telling people that stories are throw-away artifacts. They offer zero help after the story has been implemented and in production.” Christian Hassa had similar thoughts on this problem: “Gherkin scenarios have two main use cases: describing a desired system change and describing the current expected system behavior. Gherkin scenarios often end-up in JIRA, because they are written when a new requirement is specified. However, JIRA tickets of small user stories are not ideal to keep an overview of current system behavior. With SpecFlow+ and SpecMap we want to use mapping techniques like use story mapping to build a higher level view for Gherkin feature files.”

Getting a good overview of the up-to-date functionality is difficult with current tooling around task tracking, and closing this gap is probably where we’ll see tool vendors try to work in the future.

Gáspár Nagy created a tool called SpecSync that can synchronize scenarios to test case work items in Azure DevOps.

Since Cucumber was bought by SmartBear, the company has had more resources to invest in solving the problem of providing good living documentation. Matt Wynne, co-founder of Cucumber Ltd, now BDD Advocate at SmartBear, says: “We now have product teams focussed on the mission of enabling every software team on the planet to experience the joys of BDD: one working inside Jira, and one working on a stand-alone product (CucumberStudio) which is the successor of Cucumber Pro”. Cucumber for Jira takes a similar approach to SpecSync and publishes living documentation from Cucumber files directly in Jira. Since the acquisition, the Cucumber team has been incorporating Cucumber Pro features into HipTest (rebranded CucumberStudio), which can also link Jira tickets to living documentation.

Moving beyond just basic synchronisation, tools may need to support more complex workflows to truly become valuable to a wider group of roles, and there’s some early development in this area already. Christian Hassa said: “SpecFlow+ connects plain text Gherkin under source control to already established collaboration mechanisms in Azure DevOps: Features files and individual scenarios can be linked to work items, where business stakeholders provide feedback. Specification changes are captured as follow-up tasks for that work item, and updates to the feature files under source control remain fully transparent to all stakeholders through the linked scenarios.”

Looking forward to the next ten years

Although the numbers related to collaborative analysis look surprisingly promising, many teams still do not get the full engagement from customer representatives that they should. As a community, we still have to work on promoting the message that collaboration is critical, and that software teams need bridge builders instead of ferrymen, as North and Fowler put it.

I spent the better part of the last ten years telling people to focus more on conversations than on automation, but now I find myself having to praise the benefits of automating checks to people who forgot them. If you have good discussions on examples but don’t automate tests based on them, consider perhaps investigating one of the automation tools. The tooling infrastructure improved and matured quite a lot over the last decade.

My guess is that the big challenge related to tooling over the next 10 years will be in integrating better with Jira and its siblings. Closing the loop, so that teams that prefer to see information in task tracking tools also get the benefits of living documentation, will be critical. Although none of the community attempts in this space have so far gained wide adoption, there are several new efforts underway that look very promising. With strong financial support from two large companies, I expect the situation to improve significantly in the future.

header photo: ThisisEngineering RAEng on Unsplash